For 30 years, Furtherfield has pioneered the critical imagination of art, technology, and networked cultures. In this time, dominant global actors have created and imposed systems that support contemporary life for some while poisoning our planet-wide environment and societies at an escalating rate. There is an urgent need to adopt the principles of less, again, and differently, in a fair and equitable way: seeking to cause less harm; acting again on knowledge that has been available (if ignored or downplayed) for decades; and acting differently in response to emerging knowledge and ways of knowing, being, and feeling. Understanding our work in this way, as something that exists within a living system, is one way of accepting that we are all material beings situated within vast chains of consequence, themselves enmeshed within larger ones. This requires a different standard of responsibility: a way to be responsive and accountable in a changing world, working to address the uneven impacts on people, creatures, and places that may be geographically remote from us but nevertheless bear the cost of our actions.

In 2025, we are shifting the main focus of our work from our long-standing gallery in Finsbury Park, North London, to a mid-sized town on Suffolk’s east coast. Felixstowe, a town of 24,000 people, is our new home, where we will join existing communities, cultural partners, ecosystems, and species. This transition is a modal shift for the organisation and means we are doing things differently. In Felixstowe, we begin a new journey towards becoming an embedded, community-needs-led art organisation focused on art, technology, and eco-social change. By eco-social change we mean systemic transformation that integrates social justice and ecology to create fairer, more resilient futures for all.

With this move, we commit to being in relation with communities, contexts, and biodiverse regions that are entirely new to us. In creating this policy, we hope not only to “move to” but to “move with” our new location, developing different relationships with ourselves, each other, and the land, while composting and letting go of existing practices and connections that cause harm. This environmental action plan therefore addresses the listening, learning, flexibility, agility, stamina, synchronisation, and interpersonal mobility that this will require.

Our first environmental action is to sign up to Culture Declares Emergency. Our second has been to develop an environmental policy for the next three years. Our third will be to make two climate pledges by the end of year one.

“We declare that the Earth’s life-supporting systems are in collapse, threatening biodiversity and human societies everywhere.

Alive to the beauty of our planet, we unite to challenge the dominant global power structures that fail to protect us as they disregard scientific consensus, silence marginalised voices and perpetuate ecocide.

As Declarers, we take action to harness the power of arts and culture to express heartfelt truths and address deep-rooted injustices, to care for and create adaptive, resilient and joyful communities, and to influence the urgent and necessary transformation of harmful global systems.”

On creating this policy, we:

In this new phase, we are committed to:

For its closing community gathering of the year, the Disruption Network Lab (DNL) organised a conference to extend and connect its 2019 programme ‘The Art of Exposing Injustice’ with social and cultural initiatives, fostering direct participation and deeper engagement with the topics discussed throughout the year. Transparency International Deutschland, the Syrian Archive, and Radical Networks are among the organisations and communities that have taken part in DNL activities and were directly involved in this conference, held on 30 November and entitled ‘Activation: Collective Strategies to Expose Injustice’. Covering anti-corruption, algorithmic discrimination, systems of power, and injustice, it was the culmination of the meet-up programme that ran parallel to the three conferences of 2019.

The day opened with the talk ‘Untangling Complexity: Working on Anti-Corruption from the International to the Local Level,’ a conversation with Max Heywood, global outreach and advocacy coordinator for Transparency International, and Stephan Ohme, lawyer and financial expert from Transparency International Deutschland.

In the conference ‘Dark Havens: Confronting Hidden Money & Power’ (April 2019), DNL focused on offshore financial systems and global networks of international corruption involving not only secretive tax havens but also financial institutions, legal systems, governments, and corporations. On that occasion, DNL hosted discussions about the Panama Papers and other leaks that exposed hundreds of cases of tax evasion through offshore regimes. With contributions from whistleblowers and people involved in investigations, the panels unearthed how EU institutions turn a blind eye to billions of euros worth of wealth that disappears, not always out of sight of local tax authorities, and how, despite the global outrage caused by investigations and leaks, the practice of billionaires and corporations stashing their cash in tax havens is still very common.

Introducing the talk ‘Untangling Complexity,’ Disruption Network Lab community director Lieke Ploeger asked the two members of Transparency International and its local chapter, Transparency International Deutschland, to take stock after a year-long collaboration with the Lab, during which they had shown that, in order to expose and defeat corruption, complexity must be made transparent and simple. With chapters in more than 100 countries and an international secretariat in Berlin, Transparency International works on anti-corruption at international and local levels through participatory global action, which it regards as the only effective way to untangle the complexity of the hidden mechanisms of international tax evasion and corruption.

Such crimes are very difficult to detect and, as Heywood explained, transparency is too often interpreted as the mere availability of documents and information. Instead, it requires a higher degree of participation, since documents and information must be made comprehensible, both individually and in their connections. In many cases, corruption and illegal financial activities are shielded behind technicalities and solid legal bases that make them hard to uncover. Within complicated administrative structures, among millions of documents and terabytes of files, an investigator is asked to find evidence of wrongdoing, corruption, or tax evasion. Most of the work lies in connecting the dots, combining data and metadata to reveal a hidden structure of power and corruption. As in a big puzzle, all the pieces are connected, but there are often so many of them that only a collective effort allows proper scrutiny. That is why a law that ensures transparency on real estate and private funds in Berlin, for example, might help in a corruption case somewhere else in the world. Just as in the financial system, in anti-corruption nothing is purely local, and cooperation among many actors is essential to achieve results.

The recent case of public Country-by-Country Reporting illustrates the situation in Europe. It was an initiative proposed by the European Commission in its 2015 ‘Action Plan for Fair and Efficient Corporate Taxation’. It aimed to amend existing legislation to require multinational companies to publicly disclose the income tax they pay in each EU member state in which they operate. Few details would have to be disclosed, and the proposal applies only to companies with a turnover of at least €750 million, with the aim of showing how much profit they generate and how much tax they pay in each of the 28 countries. However, many member states remain reluctant, especially those that benefit from profit-shifting within the EU. Some, including Germany, worry that revealing companies’ tax and profit information publicly will give a competitive advantage to companies outside Europe that do not have to report such information. Twelve countries voted against the new rules, all of them member states with low-tax environments that help shelter the profits of the world’s biggest companies. Luxembourg is one of them. According to the International Monetary Fund, Luxembourg, with around 600,000 inhabitants, hosts as much foreign direct investment as the USA, raising the suspicion that most of this flow goes to “empty corporate shells” designed to reduce tax liabilities in other EU countries.

Moreover, in every EU country there are voices from the industrial establishment opposing this proposal. In Germany, the Foundation of Family Businesses, which, as Ohme remarked, represents the interests of big companies despite its name, claims that enterprises are already subject to increasingly strong public scrutiny through a continuously growing number of disclosure requirements. It complains about what it considers the negative consequences of public Country-by-Country Reporting for its members’ businesses, stating that member states should withhold their consent, since the rules would considerably damage companies’ competitiveness and turn the EU into a nanny state. But whatever the expectations and lobbying of the industrial élite, European citizens want multinational corporations to pay fair taxes on EU soil where the money is generated. The current fiscal regimes increase disparities and enable profit-shifting and bank secrecy. As a result, most of the fiscal burden falls on less mobile taxpayers, such as retirees, employees, and consumers, whilst corporations and billionaires get away with their misconduct.

Transparency International encourages citizens all over the globe to keep demanding accountability and improvements in their financial and fiscal systems without giving up. In 1997, the German government made bribes paid to foreign officials by German companies tax-deductible, and until February 1999 German companies were allowed to pay bribes to do business abroad, a common practice, particularly in Asia and Latin America, since at least the early 70s. But things have changed. Ohme is aware of the many daily scandals related to corruption and tax evasion, and for this reason he considers the work of Transparency International necessary. However, he asked his audience not to describe it as a radical organisation but as an independent one that operates on the basis of research and objective investigation.

In the last months of 2019, the so-called Cum-Ex scandal in Germany caught the attention of international news outlets as investigators uncovered a trading scheme exploiting a loophole in the German tax code on dividend payments. Authorities allege that bankers helped investors reap billions of euros in illegitimate tax refunds: Cum-Ex deals involved a trader borrowing a block of shares to bet against them and then selling them on to another investor around the dividend payment date. In the end, parties on both sides of the trade could claim a refund of the withholding tax paid on the dividend, even though prosecutors contend that only a single rebate was actually due. The loophole was closed in 2012, but investigators believe that in the meantime law firms such as Freshfields advised many banks and other financial market participants on how to profit illegally from it.

As both Heywood and Ohme stressed, we need measures that guarantee open access to relevant information, such as the beneficial owners of assets held by entities and arrangements like shell companies and trusts, that is, information about the individuals who ultimately control or profit from a company or estate. Experts indicate that registers of beneficial owners help authorities prosecute criminals, recover stolen assets, and deter new offences; they make it harder to hide connections to illicit flows of capital out of national budgets.

Referring to the latest package of measures on money laundering and financial transparency, then under approval by the German parliament, Ohme expressed cautious appreciation for the improvements, as real estate agents, gold merchants, and auction houses will be subject to tighter regulation in the future. Lawmakers complained that the US embassy and Apple tried to quash part of these new rules, and that during the parliamentary debate they sought to intervene with the Chancellery to prevent a section of the law from being adopted. The attempt concerned a provision that forces digital platforms, such as Apple with its payment service Apple Pay, to open their interfaces to other payment services and apps, but it failed. Apple’s behaviour is a sign of the constant interference of vested interests whenever these topics are discussed.

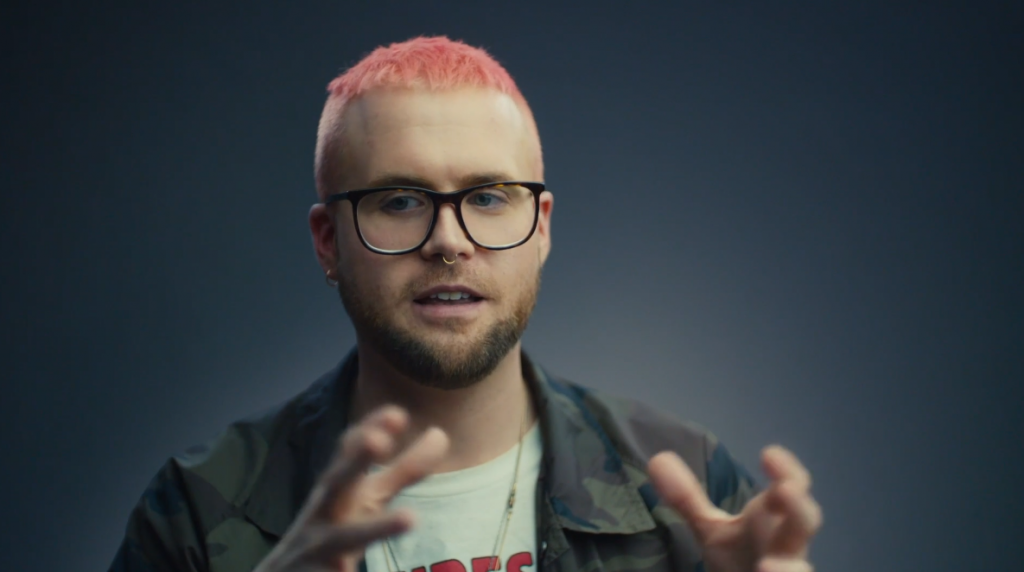

At the end of the first talk, DNL hosted a screening of the documentary ‘Pink Hair Whistleblower’ by Marc Silver. It is an interview with Christopher Wylie, a former employee of the British consulting firm Cambridge Analytica, who revealed how the company built a system in 2014 that could profile individual US voters in order to target them with personalised political advertisements and influence the results of elections. At the time, the company was owned by the hedge fund billionaire Robert Mercer and headed by Steve Bannon, Donald Trump’s key advisor and the architect of a far-right network of political influence.

DNL discussed this subject at length in the conference ‘Hate News: Manipulators, Trolls & Influencers’ (May 2018), trying to define the pervasive, hyper-individualised, corporate-based, and illegal ways in which personal data is harvested, at times in partnership with governments, through smartphones, computers, virtual assistants, social media, and online platforms, and which can inform almost every aspect of social and political interaction.

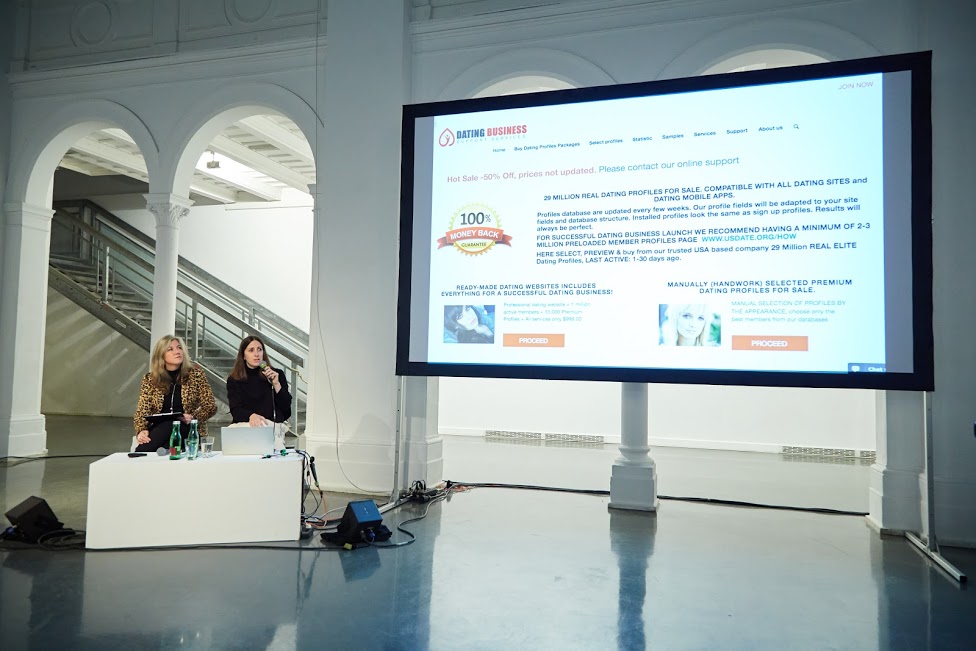

With the overall theme ‘AI Traps: Automating Discrimination’ (June 2019), DNL sought to define how artificial intelligence and algorithms reinforce prejudices and biases in society. These same issues were raised at the Activation conference in the talk ‘An Autopsy of Online Love, Labour, Surveillance and Electricity/Energy.’ Joana Moll, artist and researcher, in conversation with DNL founder Tatiana Bazzichelli, presented her latest projects ‘The Dating Brokers’ and ‘The Hidden Life of an Amazon User,’ which explore the hidden side of IT interfaces and data harvesting.

The artist’s work moves from the challenges of the so-called networked society to a critique of social and economic practices of exploitation, focusing on what stands behind the interfaces of technology and IT services and giving a visual representation of what is hidden. Because users do not see what happens behind the online services they use, individuals and collectives have lost much of their ability to define and protect their privacy and self-determination, and get stuck in traps built to extract the most from their conscious or unconscious contributions. Moll explains that, although most of our daily transactions are carried out through electronic devices, we know very little about the activities that take place with and beyond the interface we see and interact with. We do not know how the machine is built, and we are mostly not in control of its activities.

Her project ‘The Dating Brokers’ focuses on current practices in the global online dating ecosystem, which are crucial to its business model but mostly opaque to its users. In 2017, Moll purchased 1 million online dating profiles from the website USDate, a US company that buys and sells profiles from all over the world. For €136, she obtained almost 5 million pictures, usernames, email addresses, details about gender, age, and nationality, and personal information such as sexual orientation, private interests, profession, physical characteristics, and personality. By analysing a few profiles and searching for matches online, the artist was able to map a vast network of companies and dating platforms capitalising on private information without the consent of their users. The project is a warning about the dangers of placing blind faith in big companies and raises alarming ethical and legal questions that urgently need to be addressed, as dating profiles contain intimate information about users, and the exploitation and misuse of this data can have dramatic effects on their lives.

With the ongoing project ‘The Hidden Life of an Amazon User,’ Moll attempts to reveal the hidden side of interfaces. The artist documented what happens in the background during a simple order on Amazon. When she purchased the book ‘The Life, Lessons & Rules for Success’ about Amazon founder Jeff Bezos, her computer was loaded with so many scripts and requests that she could trace almost 9,000 pages of code generated by the order, and more than 87 megabytes of data running in the background of the interface. A large part of the scripts are JavaScript files that can in theory be used to collect information, but it is impossible to know what each of these commands does.

With this project, Moll describes the hidden aspects of a business model built on monitoring and profiling customers, which encourages them to share more details, spend more time online, and make more purchases. Amazon and many other companies aggressively exploit their users as a core part of their marketing activity. Whilst buying something, users provide clicks and data for free and supply unpaid labour whose energy costs do not appear on the companies’ bills. Customers navigate through the user interface as content and windows constantly load into the browser to enable interactions and record users’ activities. Every single click is tracked and monetised by Amazon, and the company can freely exploit these external resources and profit from them.

The artist warns that these hidden surveillance and profiling activities constantly contribute to CO2 emissions, because a massive amount of energy is required to load the scripts on users’ machines. Moll followed only the basic steps necessary to complete the order and buy the book; more clicks would obviously generate much more background activity. This is a further environmental cost that customers of these platforms cannot choose to stop, and it should also be considered for its broader and long-term implications. Scientists predict that by 2025 the information and communications technology sector might use 20 per cent of all the world’s electricity and consequently cause up to 5.5 per cent of global carbon emissions.

Moll concluded by saying that we can hope more and more individuals will decide to avoid certain online services and live more sustainably. But trends show that the vast majority of people keep using these platforms and online services, which are harmful because their hidden mechanisms affect people’s lives and have environmental and socio-economic consequences. Moll suggested that these topics should be approached at the community level to find political solutions and countermeasures.

The 17th conference of the Disruption Network Lab, ‘Citizens of Evidence’ (September 2019), explored the investigative impact of grassroots communities and citizens working to expose injustice, corruption, and power asymmetries. Citizen investigations use publicly available data and sources to verify facts independently. More and more often, ordinary people and journalists work together to provide a counter-narrative to the deliberate disinformation spread by politically influenced news outlets, corporations, and dark-money think tanks. At the Activation conference, in a talk moderated by Nada Bakr, DNL project and community manager, Hadi Al Khatib, founder and director of ‘The Syrian Archive’, and artist and filmmaker Jasmina Metwaly discussed the role of open archives in the collaborative production of social justice.

The panel ‘Archives of Evidence: Archives as Collective Memory and Source of Evidence’ opened with Jasmina Metwaly, a member of Mosireen, a media activist collective that came together to document and spread images of the Egyptian Revolution of 2011. During and after the revolution, the group produced and published over 250 videos online, focusing on street politics, state violence, and labour rights, and reaching millions of viewers on YouTube and other platforms. Mosireen, whose name in Arabic is a pun on the words “Egypt” and “determination” and could be translated as “we are determined,” has worked since its founding on collective strategies to enable participation and to channel the energy of the 2011 protesters into the constructive discourse needed to keep fighting. Mosireen activists organised street screenings, educational workshops, production facilities, and campaigns to raise awareness of the importance of archives in the collaborative production of social justice.

In January 2011, the wind of the Tunisian Revolution reached Egypt, where people gathered in the streets to overthrow the dictatorial system. In Cairo’s central Tahrir Square, for more than three weeks, people occupied public space in a determined and peaceful protest for social and political change towards democracy and greater respect for human rights.

Over five years, starting in 2013, the collective built the platform ‘858: An Archive of Resistance’, an archive of 858 hours of video material from 2011 in which footage is collected, annotated, and cross-indexed for consultation. It was released on 16 January 2018, seven years after the Egyptian protests began. The material is time-stamped and published without a linear narrative, and it is hosted on Pandora, an open-source tool accessible to everybody.

The documentation gives a vivid representation of the events. There are historical moments recorded at the same time from different perspectives by dozens of different cameras; there are videos of people expressing their hopes and dreams whilst occupying the square or demonstrating; there is footage of human rights violations and video sequences of military attacks on demonstrators.

In the last six years, the narrative of the 2011 Egyptian revolution has been polluted by revisionism, mostly propaganda by the government and other parties seeking to appropriate it. In the meantime, Mosireen was working on the original videos from the revolution, conscious of the increasing urgency of the task. Memory is subversive and can become a tool of resistance, as the archive preserves the voices of those who were on the streets animating those historical days.

Thousands of different points of view together compose a collection of visual evidence that can play a role in preserving the memory of events. The archive is studied in universities, and several videos have been used for research on the types of weapons used by the military and the police. But what matters most is that people who took part in the revolution are thankful for its existence. The archive is one of the available strategies for preserving people’s own narratives and memories of the revolution and protecting them from manipulation. In those days and in the following months, Egypt’s public spaces were places of political ferment, cultural vitality, and action for citizens and activists. The crowds were filled with creativity and rebellion. But that identity is at risk of disappearing. That kind of participation and filming is no longer possible; public spaces are besieged. The archive cannot be just about preserving and inspiring. The collective is now looking for more videos and is determined to continue providing a counter-narrative on Egyptian domestic and international affairs, despite tightened surveillance, censorship, and hundreds of websites blocked by the government.

There are many initiatives that aim to resist the forgetting of facts and the silencing of independent voices. In 2019, the Disruption Network Lab worked on this with Hadi Al Khatib, founder and director of ‘The Syrian Archive,’ who spoke on this panel at the Activation conference. Since 2011, Al Khatib has been collecting, verifying, and investigating citizen-generated data as evidence of human rights violations committed by all sides in the Syrian conflict. The Syrian Archive is an open-source platform that collects, curates, and verifies visual documentation of human rights violations in Syria, preserving the data as a digital memory. The archive is a means of establishing a verified database of facts and a tool for collecting evidence and objective information to bring order to the ecosystem of misinformation and injustice surrounding the Syrian conflict. It also includes a metadata database to contextualise videos, audio recordings, pictures, and documents.

Such a project can play a central role in establishing responsibilities, violations, and misconduct, and could contribute to eventual post-conflict judicial processes, since the archive’s structure and methodology are designed to meet international standards. The Syrian conflict is a bloody reality involving international actors and interests, and it is far from over. International reports in 2019 indicate at least 871 attacks on vital civilian facilities and the deaths of 3,364 civilians, one in four of them children.

The platform ensures that journalists and lawyers can use the verified data for their investigations and for building criminal cases. The work on the videos is based on meticulous attention to detail and on comparison with official sources and publicly available material such as photos, footage, and press releases disseminated online.

The Syrian activist and archivist explained that many important documents can be found on external platforms such as YouTube, which censor and erase content using AI under pressure to remove “extremist content,” purging vital human rights evidence. Social media platforms have recently been criticised for acting too slowly when killers live-stream mass shootings, and for allowing extremist propaganda on their platforms.

DNL has already addressed the consequences of automated removal, which in 2017 deleted 10 per cent of the archives documenting violence in Syria: artificial intelligence detects and removes content, but an automated filter can’t tell the difference between ISIS propaganda and a video documenting government atrocities. YouTube, owned by Google, has already erased 200,000 videos of documentary and historical relevance. In countries at war, evidence captured on smartphones can provide a path to justice, but AI systems often flag it as inappropriate violent content and consequently erase it.

Al Khatib launched a campaign urging platforms to fix and improve the content moderation systems they use to police extremist content, and, when defining their measures against misinformation and crime, to take into account aspects such as the preservation of collective memory of significant events. Twitter, for example, has just announced a plan to remove accounts that have been inactive for six months or longer. As Al Khatib explains, this could cause a significant loss to the memory of the Syrian conflict and of other war zones, and the loss of evidence that could be used in justice and accountability processes. Some users have died, are detained, or have lost access to the accounts on which they used to share important documents and testimonies.

In the last year, the Syrian Archive platform was replicated for Yemen and Sudan to support human rights advocates and citizen journalists in their efforts to document human rights violations, developing new tools to increase the quality of political activism, future prosecutions, human rights reporting and research. In addition to this, the Syrian Archive often organises workshops to present its research and analyses, such as the one in October within the Disruption Network Lab community programme.

DNL often focuses on how new technologies can advance or restrict human rights, sometimes offering both possibilities at once. For example, free and open technologies can significantly enhance freedom of expression by opening up communication options, and they can assist vulnerable groups by enabling new ways of documenting and communicating human rights abuses. At the same time, hate speech can be disseminated more readily, surveillance technologies are employed without appropriate safeguards and unreasonably impinge on individual privacy, and infrastructures and online platforms can be controlled to hunt down and discredit minorities and free speakers. The last panel discussion of the conference, ‘Algorithmic Bias: AI Traps and Possible Escapes’, was moderated by Ruth Catlow, who introduced the two speakers and asked them to debate effective ways to define this issue and to discuss possible solutions.

Ruth Catlow is co-founder and co-artistic director of Furtherfield, an art gallery in London’s Finsbury Park and a home for artworks, labs, and debates based on playful, collaborative art research experiences, always across distances and differences. Furtherfield diversifies the people involved in shaping emerging technologies through an arts-led approach, always looking for ways to disrupt the network power of technology and culture, to engage with the urgent debates of our time, and to make these debates accessible, open, and participatory. One of its latest projects focused on algorithmic food justice, environmental degradation, and species decline. Exploring how new algorithmic technologies could be used to create a fairer and more sustainable food system, Furtherfield worked on solutions in which culture comes before structures, and in which human organisation and human needs, or the needs of other living beings and living systems, are at the heart of the design of technological systems.

As Catlow recalled, in the conference ‘AI Traps: Automating Discrimination’ (June 2019), the Disruption Network Lab focused on possible countermeasures to the racial bias that AI-informed decision-making can produce and that AI decision-making tools can reinforce. It was an inspiring and stimulating event on inclusion, education, and diversity in tech, highlighting that algorithms are neither neutral nor unbiased. On the contrary, they often reflect, reinforce, and automate the current and historical biases and inequalities of society, such as social, racial, and gender prejudices. The panel at the Activation conference framed these issues in the context of the work of the speakers, Caroline Sinders and Sarah Grant.

Sinders is a machine learning design researcher and artist. Her work focuses on the intersections of natural language processing, artificial intelligence, abuse, online harassment, and politics in digital and conversational spaces. She presented her latest study on Intersectional Feminist AI, focusing on labour and automated computer operations.

Quoting Hyman (2017), Sinders argued that the world is going through what some call a Second Machine Age, in which the reorganisation of people matters as much as, if not more than, the new machines. Employees receiving a regular wage or salary have begun to disappear, replaced by independent contractors and freelancers whose remuneration is calculated on the basis of hours worked, output, or piecework. Labour and social rights won through hard, bloody struggles over the last two centuries seem to have become irrelevant. More and more tasks are performed through AI, which plays a big role in the revenues of large corporations. Yet machine abilities are still only possible thanks to the fundamental contribution of human work.

Sinders begins her analysis from the premise that human labour has become hidden inside automation but remains integral to it. Training machines is a process in which human hands touch almost every part of the pipeline, making decisions. Yet the people who train data models are underpaid and unseen in this process. Thomas Thwaites’ Toaster Project was a critical design project in which the artist built a toaster from scratch, smelting iron, building circuits and creating a new plastic shell. In the same spirit, Sinders examines the artificial intelligence economy through the lens of intersectional feminism. Her aim is to determine how, and to what extent, it is possible to create an AI that respects the principles of non-exploitation, non-bias, and non-discrimination at every step.

Her research considers the ‘Mechanical Turk’ model, in which a machine that appears to be fully automated is actually operated by a human. Mechanical Turk is also the name of a platform run by Amazon, where people carry out computer-like tasks for a few cents; the name has become synonymous with low-paid digital piecework. A recent study analysed nearly 4 million tasks performed on Mechanical Turk by almost 3,000 workers. It found that those workers earned a median wage of about $2 an hour, while only 4% earned more than $7.25 an hour. The platform has flourished since 2005, and its workers are used to train AI systems. The work mostly consists of systematised, factory-like tasks. Even so, it falls under the gig economy, so the people who do it are treated as gig workers, with no paid breaks, no holidays, and no guaranteed minimum wage.

Sinders concluded that an ethical, equitable, and feminist environment cannot be achieved through a process built on competition among slave labourers. Such a process discourages unions, pays a few cents per repetitive task and creates nameless, hidden labour. Instead, the process must be thoughtful and critical in order to lay the basis for equity. It must be open to feedback and interpretation, created for communities and as a reflection of those communities. To create a feminist AI, it is necessary to define labour, data collection, and data training systems. This means not just asking how the algorithm was made, but investigating and questioning each of these elements from an ethical standpoint at every step of the pipeline.

In her talk, Grant, founder of Radical Networks, a community event and art festival for critical investigations and creative experiments around networking technology, described the three main planes with which online users interact and where injustice and disenfranchisement can occur.

The first is the control plane, which refers to internet protocols: the plumbing, the infrastructure. A protocol is essentially a set of rules governing how two devices communicate with each other. It is not merely a technical matter, because a protocol is a political act that involves exerting control over a group of people. It can also mean making decisions for the benefit of a specific group of people, so the question becomes: are our protocols political?

The Internet Engineering Task Force (IETF) is an open standards organisation that develops and promotes voluntary Internet standards, in particular the standards that make up the Internet protocol suite (TCP/IP). It has no formal membership roster, and all participants and managers are volunteers, though their activity within the organisation is often funded by their employers or sponsors. The IETF was initially supported by the US government, and since 1993 it has operated as a standards-development function under the Internet Society, an international, membership-based non-profit organisation. The IETF is overseen by the Internet Engineering Steering Group (IESG). This body provides the final technical review of Internet standards, manages the day-to-day activity of the IETF, and sets the standards and best practices for developing protocols. It hears appeals against the decisions of the working groups and decides whether documents progress along the standards track. As Grant explained, many IETF participants are currently employed by major corporations such as Google, Nokia, Cisco, and Mozilla. Although they participate as individuals, this creates a conflict of interest and undermines independence and autonomy. The founder of Radical Networks doubts that for-profit companies can be trusted in these matters.

The second plane is the user plane, which covers the user experience and the interface. Two aspects come into play here: UX (user experience) design and UI (user interface) design. UX is the presumed interaction model that defines the process a person will go through when using a product or a website. UI is the actual interface: the buttons and fields we see online. UX and UI are supposed to serve the end user, but often they do not. The interface is frequently optimised to get users to act online in ways that are not in their best interest. The internet is full of so-called dark patterns, designed to mislead or trick users into doing things they might not want to do.

These dark patterns are part of the weaponised design that dominates the web, which wilfully allows harm to users. It is implemented by designers who are unaware of, or unconcerned about, the politics of digital infrastructure, and who often consider their work apolitical and purely technical. In this sense, they believe they can keep design for designers only, shutting out all the other components that make up society; this is itself a political choice. Moreover, when we consider the relationship between technology and issues such as privacy, self-determination, and freedom of expression, we need to think of the international human rights framework. That framework was built to ensure that, as society changes, the fundamental dignity of individuals remains essential. Over time, it has proved flexible enough to adapt to changing circumstances, and we are now asked to apply existing standards to the technological challenges that confront us. However, it is up to individual developers to decide how to implement protocols and software, for example whether to build in human rights standards by design. Such choices are political.

The third plane is the access plane, which controls how users actually get online. Here, Grant used Project Loon as an example of the importance of owning the infrastructure. Project Loon, originally developed at Google, is run by Loon LLC, an Alphabet subsidiary. The company works on providing Internet access to rural and remote areas and on restoring connectivity and coverage after natural disasters, using internet-beaming balloons. As the panellist explained, this may look like an altruistic gesture towards vulnerable populations. But companies like Google and Facebook follow the logic of profit, and controlling the connectivity of large populations brings power and opportunities for profit. Corporations with data and profiling at the core of their business models have come to dominate the markets. Many therefore view with suspicion the desire of big companies to bring the Internet to the four billion people who are currently offline.

As Catlow warned, we run the risk of the Internet becoming synonymous with Facebook and Google. Instead, we need communities able to develop new skills and build autonomous infrastructures, such as wireless mesh networks. In these networks, small devices called ‘nodes’, commonly placed on rooftops or in windows, send and receive data and a Wi-Fi signal to and from one another without an Internet connection. The largest and oldest wireless mesh network is the Athens Wireless Metropolitan Network (A.W.M.N.) in Greece, but there are other successful examples in Barcelona (Guifi.net) and Berlin (Freifunk Berlin). The goal is not just to counterbalance the power of telecommunications companies and corporations, but also to build awareness, participation, and tools of resistance.

The Activation conference brought together the community around the Disruption Network Lab at the Künstlerhaus Bethanien in Berlin to share collective approaches and tools for activating social, political, and cultural change. It was a chance to meet collectives and individuals working on alternative ways of intervening in social dynamics, and to discover ways of connecting networks and activities to disrupt systems of control and injustice. Curated by Lieke Ploeger and Nada Bakr, the conference developed a shared vision grounded firmly in one belief. By embracing participation, and by supporting the independent work of open platforms as tools for fostering participation and social, economic, cultural, and environmental transparency, citizens around the world have enormous potential to bring about justice and political change. In doing so, they can help build more inclusive, sustainable and equitable societies with more opportunities for all. To achieve this, it is necessary to strengthen the many existing initiatives within international networks. This means widening cooperation among the collectives and organisations working on these challenges, so that they can share experiences and good practices.

Information about the 18th Disruption Network Lab Conference, its speakers, and its topics is available online:

https://www.disruptionlab.org/activation

To follow the Disruption Network Lab, sign up for its newsletter and get information about conferences, ongoing research, and latest projects. The next event is planned for March 2020.

The Disruption Network Lab is also on Twitter and Facebook.

All images courtesy of Disruption Network Lab

In thinking about the relationship between science fiction and social justice, a useful starting-point is the novel that many regard as the Ur-source for the genre: Mary Shelley’s Frankenstein (1818). When Shelley’s anti-hero finally encounters his creation, the Creature admonishes Frankenstein for his abdication of responsibility:

I am thy creature, and I will be even mild and docile to my natural lord and king, if thou wilt also perform thy part, the which thou owest me. … I ought to be thy Adam, but I am rather the fallen angel, whom thou drivest from joy for no misdeed. … I was benevolent and good; misery made me a fiend. Make me happy, and I shall again be virtuous.

Popularly misunderstood as a warning against playing God (a notion that Shelley only introduced in the introduction to the 1831 edition), Frankenstein’s meaning is really captured in this passage. Shelley, influenced by the radical ideas of her parents, William Godwin and Mary Wollstonecraft, makes it clear that the Creature was born good and that his evil was the product only of his mistreatment. Echoing the social contract of Jean-Jacques Rousseau, the Creature insists that he will do good again if Frankenstein, for his part, does the same. Social justice for the unfortunate, the misshapen and the abused is what underlies the radicalism of Shelley’s novel. Frankenstein’s experiments give birth not only to a new species but also to a new concept of social responsibility, in which those with power are obliged to acknowledge, respect and support those without. It is a relationship that Frankenstein literally runs away from.

The theme of social justice, then, is present at the very birth of the sf genre. It looks backwards to the utopian tradition, from Plato and Thomas More to the progressive movements that characterised Shelley’s Romantic age. It also looks forwards to the ways in which science fiction, as we would recognise it today, has imagined future and non-terrestrial societies with all manner of different social, political and sexual arrangements.

Shelley’s motif of creator and created is one way of examining how modern sf has dramatized competing notions of social justice. Isaac Asimov’s I, Robot (1950) and, even more so, The Caves of Steel (1954) ask not only ‘can a robot pass for human?’ but also, more importantly, ‘what happens to humanity when robots supersede it?’. Amid current anxieties surrounding AI, Asimov’s stories are enjoying a revival of interest. One possible answer to the latter question is to police the boundary between human and machine. Philip K. Dick’s Do Androids Dream of Electric Sheep? (1968) explores this grey area through the Voigt-Kampff Test, which measures the subject’s empathetic understanding. The test is memorably dramatized in Ridley Scott’s film version, Blade Runner (1982). In William Gibson’s novel Neuromancer (1984) and the Japanese anime Ghost in the Shell (1995), branches of both the military and the police are marshalled to prevent AIs from gaining the equivalent of human consciousness.

Running parallel with Asimov’s robot stories, Cordwainer Smith published the tales that make up ‘the Instrumentality of Mankind’, collected posthumously as The Rediscovery of Man (1993). A key element involves the Underpeople, genetically modified animals who serve the needs of their seemingly godlike masters, and whose journey towards emancipation unfolds across the stories. It is surely no coincidence that both Asimov and Smith were writing against the backdrop of the Civil Rights Movement. It is also indicative of the magazine culture of the period that both had to write allegorically. In N.K. Jemisin’s Broken Earth trilogy (2015–17), which won an unprecedented three successive Hugo Awards, the racial subtext of the struggle between ‘normals’ and post-humans is made explicit.

Jemisin, like Ann Leckie, author of the multiple award-winning Ancillary Justice (2013), is indebted to the black and female authors who came before them. In particular, the influence of Octavia Butler has grown immeasurably since her premature death in 2006, as the anthology of new writing Octavia’s Brood (2015) indicates. Butler’s abiding preoccupation was with the compromises that the powerless would have to make with the powerful simply in order to survive. Her final sf novels, Parable of the Sower (1993) and Parable of the Talents (1998), tentatively posit a more utopian vision. This hard-won prospect owes something both to Joanna Russ’s no-nonsense ideal of Whileaway in The Female Man (1975) and to the ‘ambiguous utopia’ of Ursula Le Guin’s The Dispossessed (1974). Leckie, in particular, has acknowledged her debt to Le Guin. Most attention has been paid to the representation of non-binary sexualities in both the Ancillary novels and Le Guin’s The Left Hand of Darkness (1969). What binds the two authors, however, is their anarchic sense of individualism and communitarianism.

Whilst sf, like the roster of its recent award winners, has diversified over the decades, there is little sense of it having abandoned the Creature’s plaintive plea in Frankenstein: ‘I am malicious because I am miserable.’ It is the imaginative reiteration of this plea that makes sf a viable form for speculating on the future bases of citizenship and social justice.