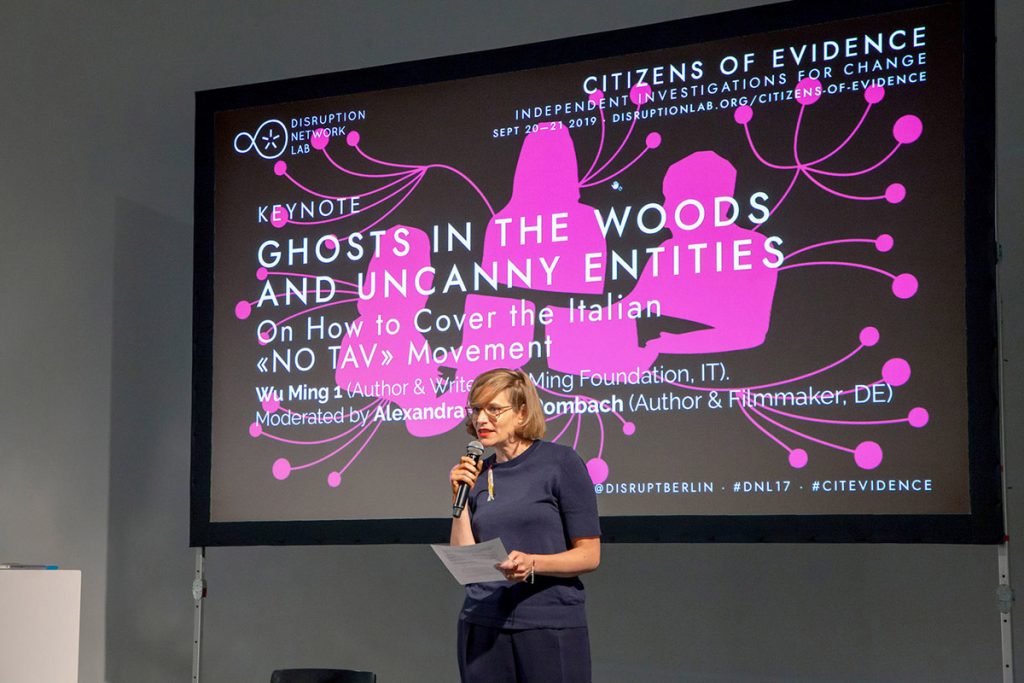

On the 20th of September, Tatiana Bazzichelli and Lieke Ploeger opened the 17th conference of the Disruption Network Lab with CITIZENS OF EVIDENCE to explore the investigative impact of grassroots communities and citizens engaged to expose injustice, corruption, and power asymmetries.

Citizen investigations use publicly available data and sources to autonomously verify facts. More and more often, ordinary people and journalists work together to provide a counter-narrative to the deliberate disinformation spread by politically influenced news outlets, corporations, and dark-money think tanks. However, journalists and citizens reporting on matters in the public interest are targeted because of the role they play in ensuring an informed society. The work of independent investigation is often delegitimised by public authorities and denigrated in a wave of generalisations against ‘the elites’ and media objectivity, which are actually designed to undermine independent information and stifle criticism. It appears to be a global process that aims to progressively blur the boundary between what is fake and what is real, and it has grown to such a level that traditional mainstream media and governments seem incapable of protecting society from a tide of disinformation.

For years, an increasingly Orwellian campaign has been waged to discredit the citizens and activists opposing a controversial high-speed rail line for freight trains between Italy and France, a project which is considered useless and harmful. The Disruption Network Lab conference opened with the keynote GHOSTS IN THE WOODS AND UNCANNY ENTITIES: On How to Cover the Italian «NO TAV» Movement by Wu Ming 1, who spent three years among the people of the Susa Valley opposing this mega-infrastructural project.

As the moderator, the author and filmmaker Alexandra Weltz-Rombach, explained, Wu Ming is a pseudonym for a group of Italian authors formed in Bologna after the experience of the Luther Blissett project. For almost 20 years the literary collective has been writing essays, meta-historical novels, and creative narrative, often using the techniques of investigative journalism. Today it is widely appreciated for its ability to deconstruct and analyse complex aspects of social and political life, challenging long-existing paradigms and traditions and synthesizing the views of different minds to build an alternative narrative of facts and inspire unconventional critical processes. Wu Ming 1 explored the Susa Valley and the woods occupied by police and wire fences, experiencing the struggle of a community in its territories, to write a history-as-novel take on the most enduring and radical environmental protest in contemporary Italy, known as No-TAV (TAV stands for Treno Alta Velocità – High Speed Train). To do so, he walked, mapped the territory, and ‘evoked ghosts’. The history of a country can be described by the history of its borders, and the Susa Valley is a borderland in the mountains. It is probably where Hannibal walked with his army to cross the Alps. Since the early 90s, another huge tunnel inside the mountains has been planned there, in a long-standing tradition of railroad tunnels built at the cost of lives and health.

To understand the No-TAV struggle we can go back in time, to when the TAV railway was first planned and the opposition of local communities started alongside it. But we can also go further back, to all the conflicts that have been fought on these mountains, which are “full of ghosts”, as the author said. Wu Ming 1 explained that in literature and popular tradition, a ghost appears when there is an unresolved story, a wasted life that ended badly. Borderlands are the places where most ghosts are to be found. In the Susa Valley, ghosts are suppressed memories of wars and of social conflicts that shaped the territory.

Wu Ming has published several works on environmental and climate issues and has written a lot about mountains too. Almost 78% of Italian territory is covered by mountains or hills. Their iconic representation has at times been twisted by nationalism, militarism, and machismo. The Alps were “sacred borders of the fatherland” – nature to conquer, a symbol of virility and power in fascist propaganda. Today those mountains are treated as an obstacle to economic growth; a growth that might put the whole Susa Valley at risk. Thus, instead of tackling legitimate concerns, project stakeholders have spent 20 years seeking to delegitimize those levelling charges against the high-speed railway, despite the mass of evidence supporting their claims, and using intimidation and violence against them. But the No-TAV collectives’ claims have always been proven right, and the project has shrunk over time. However, the fight within the Valley is still on and the TAV project is far from being shelved.

The panel on the first day, EXPOSING ABUSES: Citizens Recording Human Rights Violations from the US to The Gambia, introduced by Michael Hornsby of Transparency International, opened with a presentation by Melissa Segura, a journalist at BuzzFeed News in the US. She documented the allegations that the Chicago police officer Reynaldo Guevara had framed dozens of innocent people for murder. The reporter shed light on forgotten court cases, giving voice to families and communities affected by injustice and hit by a profound brokenness that she experienced herself when her nephew was framed and arrested years before.

A group of black and Latino mothers, aunts, and sisters knew that their loved ones were innocent, but no officials wanted to take up their cause. Segura met these women after they had been fighting for decades in search of justice. They began when the journalist was in elementary school: “at the time they had already gathered in a team, collecting data and writing spreadsheets on Lotus” she recalled. They had no chance to be heard; no PR, no lobby, and no support from the media were available to them. Segura soon realized that the story she had to cover wasn't just the conviction of a 19-year-old boy sentenced in 1999 to 110 years in prison for a murder he did not commit. It was also about the community of women who were fighting for justice; it was about their lives.

She soon learnt that her sources were able to cover their own stories much better than she could, showing her new paths to the truth. The journalist dedicated time to building a trusting relationship with them, giving full reassurance that their story would be fairly reported. After an intense three-year investigation, she succeeded in getting a key witness to testify, cracking the wall of impunity. This process, she said, “did not expose the harm of people, but tried to connect to it.”

For years, Reynaldo Guevara had been beating people up, framing them, and extorting false confessions and false testimony. Since Segura’s articles were published, seven innocent men have been freed, and dozens more convictions are under review.

In the context of the major movements that draw attention to issues such as injustice and police violence targeting specific communities and minorities in the US, policy and data analyst Samuel Sinyangwe decided to join the work of justice activist groups formed after the 2014 police shooting of Michael Brown in Ferguson, Missouri. He is now part of the Police Scorecard project, and of the Campaign Zero independent platform he co-founded, designed to facilitate and guarantee the collection of data on these violations. Sinyangwe explained that, as of today, the US government has implemented neither the collection of data on police misconduct, violence, and killings, nor a public database of disciplined police officers. In his view, US law enforcement agencies have failed to provide even basic information about the lives they have taken, in a country where at least three people are killed by police every day and black people are three times more likely to end up victims of brutal use of force by the police.

The independent observatory built by Sinyangwe seems to be quite effective. It is described as the most comprehensive accounting of people killed by police since 2013 in the US. A report from the US Bureau of Justice Statistics estimated that approximately 1,200 people were killed by police between June 2015 and May 2016. The database identified 1,179 people killed by police over this same time period. These estimates suggest that it was able to capture 98% of the total number of police killings that occurred. Sinyangwe hopes these data will be used to provide greater transparency and accountability for police departments as part of the ongoing campaign to end police violence in black and Latino communities, leading to a change of policies.
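The 98% figure follows directly from the two counts quoted above. A minimal sketch of that arithmetic, assuming the Bureau of Justice Statistics estimate is taken as the reference total (the function name `capture_rate` is illustrative, not part of any Campaign Zero tooling):

```python
def capture_rate(identified: int, estimated_total: int) -> float:
    """Share of the estimated total that the database managed to identify."""
    if estimated_total <= 0:
        raise ValueError("estimated_total must be positive")
    return identified / estimated_total


# Figures quoted in the text: 1,179 identified vs. roughly 1,200 estimated.
rate = capture_rate(1179, 1200)
print(f"{rate:.0%}")  # prints "98%"
```

Because the reference total is itself an estimate, the result is best read as an approximate coverage figure rather than an exact one.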

With data able to map the situation in the US, it has also been possible to make comparisons and draw analyses. The Campaign Zero research shows that there is a whole false narrative about criminality rates, based on numbers that just mirror a system built on different federal policies regarding police forces, and that levels of violent crime in US cities do not determine rates of police violence.

According to the data, cities with the same population density have very different rates of violence, and very different rules regulating the activity of police officers. Starting from this, Sinyangwe and his team decided to look at policy documents from different police departments. These policies determine how and when a local police officer is authorized to use force. With a closer look, the Campaign Zero team could easily determine that there is no federal standard. Some documents leave a grey area; others discourage the use of force, and particularly of deadly force, limiting it to the most dangerous scenarios after all lesser means of force have failed. Some seem to openly encourage it instead.

The group listed eight types of restrictions on the use of force to be found in these policies, consisting of graduated measures that aim to rule out, as far as possible, the use of violence. Comparisons show that a combination of these restrictions, when put in place, can produce a large reduction in police violence. Policies combining restrictions did indeed predict significantly lower rates of deadly force.

Data about unarmed people killed by police in major American cities show that black people are three times more likely to be killed by police than white people (2013-2018). Movements such as Black Lives Matter also started because of this. Another problem is that it is extremely difficult to hold US police officers accountable.

Sinyangwe underlined how it is necessary to research the components that predict police violence, and that can help hold officers accountable, to be sure that they are enforced by police departments.

Police union contracts – for example – can be considered an obstacle on the way to accountability and transparency. It is extremely rare for a police officer to be convicted of a crime in the US. This is a systemic fact and cannot be reduced to the individuals who use brutal and deadly force. It is a matter of lack of training and lack of policies enhancing non-violent solutions, but there is also legislation that protects police officers from legal consequences. It is not easy even to sue a US police officer, as they are shielded by qualified immunity and often by confidential police records, limiting how officers are investigated and disciplined. As of today, this makes it impossible to identify and punish misbehaviour, abuses, and responsibilities in most cases. According to Mapping Police Violence, a research collaborative that Sinyangwe set up to collect comprehensive data on police killings and quantify the impact of US police violence in communities, 99% of cases in 2015 did not result in any officer involved being convicted of a crime.

The Campaign Zero platform is designed to be a tool able to enhance participation, foster accountability and transparency. It is an instrument to prevent killings and it calls for the adoption of a comprehensive package of urgent policy solutions – informed by data, research and human rights principles – that can change the way police serve communities.

The last panellist of the day was the participatory video facilitator from the UK, Gareth Benest, who presented the participatory video project “Giving Voice to Victims of Grand Corruption in The Gambia”. Implemented on behalf of Transparency International in reaction to “The Great Gambia Heist” investigations by OCCRP (Organized Crime and Corruption Reporting Project), revealed in March 2019, the initiative allowed those affected by grand corruption to share their stories and present their truths in carefully edited video messages, giving voice to those Gambians who are deprived of access to basic health, education, agriculture, and potable drinking water.

In The Gambia, a truth and reconciliation commission has begun to investigate rights abuses during the 22-year-long dictatorship of Yahya Jammeh, which ended in 2017. OCCRP has exposed for the first time how the corrupt dictator and his associates plundered nearly 1 billion US$ of timber resources and Gambia’s public funds. Thousands of documents dated between 2011 and 2016, including government correspondence, contracts, legal documents, bank records, and internal investigations, define in detail the level of corruption and impunity of the Gambian system.

After the end of Jammeh’s rule, authorities have declared they will shed light on corruption, extrajudicial killings, torture, and other human rights violations. It is an important process of reconciliation, but still the voices of the marginalized and rural citizens are not heard. ‘Giving Voice to Victims of Grand Corruption in The Gambia’ was meant to facilitate a process with Gambian community members to express their perspectives on local problems and ideas, translating them into a film.

Benest explained how such a project is supposed to enable these communities to focus on the issues they are affected by and move towards changing their circumstances.

Participatory video is a technique that has been used to fight injustice in different contexts for many years. Benest recalled recent projects involving a community displaced by diamond-mining, young people excluded from poverty eradication strategies, widows made landless by customary leaders, and island residents threatened with forced evictions by land grabbers. In his work, the facilitator encourages equal participation and rotation of roles within the team. Participants control every aspect of the video making, from the process to the final result. Self-directed and self-organised videos become a communication tool that allows participants to build a dialogue for positive change.

The second day of the Disruption Network Lab conference opened with the keynote speech of Matthew Caruana Galizia, WHAT INDEPENDENT INVESTIGATORS DON’T USUALLY DISCLOSE, in which he addressed issues freelancers investigating high-level corruption face in silence and isolation, often with tragic consequences. The journalist, in conversation with Crina Boros, talked about the background of his mother, the Maltese reporter and blogger Daphne Caruana Galizia, who was killed on the 16th of October 2017, outlining the risks and the outcomes of her dangerous and brave work.

Her murder had been planned in detail for a long time. The killers were arrested, but those who ordered the murder have not been identified yet, and the criminal investigation is not moving forward. Daphne Caruana Galizia’s family is pushing the issue internationally and within Malta, knowing that unless something is done this case will just disappear from news headlines without being solved. Anti-corruption investigative journalists are arrested, threatened, and killed everywhere. People just vanish, and no justice is done.

In the 15 years before her death, Daphne Caruana Galizia appeared in 65 court cases filed against her. Her bank account was frozen; she was the victim of media campaigns against her; and she was sued by politicians, businessmen, and other journalists too. Her son recalled that when he was nine their dog was found slaughtered, and then the front door of their house was burnt down. Later on, one of their dogs was shot, and another poisoned. Threats and violence continued until their whole house was set on fire. No effective investigation was ever carried out to find the perpetrators of these crimes, though the journalist and her family had always pressed charges against persons unknown.

It is hard to be confronted with the pain and memories of personal events on a stage in front of an audience, but the issue of justice is too urgent. Even if talking about her gets more and more difficult every time, Matthew is travelling the world to keep fighting and demand justice for his mother.

Matthew had spent the last years working with his mother. The international corruption revealed by the Panama Papers – which they were investigating – did not lead to resignations or any public assumption of responsibility in Malta. The politicians involved and news outlets attacked the independent journalists covering the cases with all available means. The pressure on Daphne intensified to such a degree that she was sued 30 times in the last year before her death alone. In those moments she kept repeating to her son Matthew that, no matter how hopeless the situation, there is an urgency to strive to make corruption and responsibilities publicly known. The Maltese blogger was not naive; she was well aware that there was a risk of getting killed, as happened to Anna Stepanovna Politkovskaya and many colleagues all over the world. But she did not give in.

Reviewing his mother’s life, Matthew mentioned a further aspect to consider: Daphne had to use much of her time and money to defend herself in the trials against her, which were long and very expensive. She had passion and abilities. She was so talented that she could publish a magazine about food, architecture, and design – on which she spent just a couple of days a month – to earn enough money to carry on with her independent investigation work and pay for her legal defence.

When there is a whole system against you, you need very good lawyers, you need expertise, and you need money to pay for it. The Maltese blogger spent a whole career overcoming the obstacles of a corrupt system and sustained herself financially, making sacrifices. Despite all this, her son Matthew and her family are still convinced that the solution must come through the courts, using the available legal instruments and putting pressure on EU institutions at the highest levels. That is why Matthew Caruana Galizia asks everybody to commit to a demand for radical change. Malta is part of the European Union, as he keeps repeating.

Someone had been trying to silence Daphne for years before her murder. They must have come to the conclusion that the only way to shut her up was an assassination, in order to erase her stories along with her, as her son Matthew sadly commented. To prevent this, several newspapers and investigative organisations joined the «Daphne Project», a global consortium of 18 international media organisations including Reuters, The Guardian, and Le Monde, to continue the work of the Maltese journalist. They are led by the group Forbidden Stories, whose mission is to continue the work of silenced journalists. They stand together because they think that even if you kill a journalist like Caruana Galizia, her investigations cannot be buried with her. Thanks to the Daphne Project, and the courage and determination of Daphne Caruana Galizia’s family, her investigation lives on.

Matthew stressed the fact that it’s not about the future of one politician, or of a specific criminal group. It is about the future of Malta and the EU. Journalists who defend democracy are alone when they face the repercussions of what they do. It is necessary to make sure that when effective journalism produces results, a society trained to react and self-organise can pick up the investigative work, defend independent investigation, and ask for political accountability within a public discussion. In Malta, none of this ever happened, and Daphne became more isolated.

Grassroots citizens’ organisations are fundamental to boost activism inside local communities and demand justice. In cases like Daphne’s, no one is going to do it if not organised citizens, together with independent journalists and organisations. Many killed journalists had neither a family nor an organisation that could fight on for them. The Maltese police seem never to have developed the professional skills to work effectively on this kind of criminal case, and the few results from recent years came from the FBI in the USA. Criminals within a system that guarantees impunity can easily develop better skills.

Moreover, in Malta, investigations have a very poor rate of success, and in Daphne’s case we know only how she was murdered. But the political atmosphere in which this murder matured has been left untouched for these last two years, and the journalist’s family is worried that the official inquiry that has just started in the country is neither independent nor impartial. Members of the Board of Inquiry, they claim, have conflicts of interest at different levels, either because they were part of previous investigations or because they have ties to subjects who may be investigated now.

In the last panel of the conference, as Bazzichelli explained, the discussion focused on the connection between grassroots investigations and data analysis, and how it is possible to make sensitive data accessible without restriction and open them to the public, facilitating the publication of large datasets.

M C McGrath and Brennan Novak, introduced by moderator Shannon Cunningham, presented a tool designed to enable the publishing of data in searchable archives and the sorting through large datasets. The group builds free software to collect and analyse open data from a variety of sources. They work with investigative journalists and human rights organisations to turn that into useful, actionable knowledge. Their Transparency Toolkit is accessible to activists and citizen journalists, as well as those who lack resources or technical skills. Until a few years ago only big media organisations with particularly good technical resources could set up such instrumentation. The two IT experts decided to increase the use and the impact of open information, considering participation as a key factor to reduce the difficulties caused by relying only on media outlets or single journalists to cover complex facts or analyse large datasets.

As M C McGrath and Novak explained, Transparency Toolkit uses open data “to watch the watchers” and to hold powerful individuals and groups accountable. At the moment, their primary focus is investigating surveillance and human rights abuses, like in the case of the Hacking Team leaks in July 2015.

Hacking Team is an Italian company specialising in surveillance software and in very effective Trojans able to slip into computers and smartphones, allowing secret and total surveillance. Four years ago, 400 GB of its data was anonymously published online, showing how the IT company had been working for authoritarian governments with questionable human rights records, enabling them to use such software to spy on activists, journalists, and political opponents in countries like Morocco, Dubai, Ethiopia, Mexico, and Sudan. Transparency Toolkit mirrored the full Hacking Team dataset to make it more available to journalists and security researchers investigating these issues. It released a searchable archive of 200 GB of emails categorized by companies, countries, events, and other subjects discussed.

Other important projects from the Transparency Toolkit team are the Surveillance Industry Index (SII), developed together with Privacy International (a searchable archive featuring over 1500 brochures about surveillance technology, data on over 520 surveillance companies, and nearly 500 reported exports of surveillance technologies), the Snowden Document Search (the first comprehensive database of Snowden documents, which aims to preserve their historical impact), and ICWATCH – a platform born to collect and analyse resumes of people working in the intelligence community, for contractors, and in the military. These resumes are useful for uncovering new surveillance programs, learning more about known codewords, identifying which companies help with which surveillance programs, examining trends in the intelligence community, and more. ICWATCH provides a collection of over 100,000 of these resumes from LinkedIn, Indeed, and other public sources, now searchable with a search engine called LookingGlass.

The last part of this panel was then dedicated to the Dictator Alert project, a website that tracks the planes of authoritarian regimes all over the world. Available networks censor the information about planes belonging to intelligence services, the military, authorities, and heads of state. This project, run by Emmanuel Freudenthal and François Pilet with support from OCCRP, began as an open-source computer program to identify planes belonging to dictators flying over Geneva. The program mined data from a network of antennas used by plane spotters and shared its alerts via Twitter. Today, Dictator Alert uses data from ADSB-Exchange, as well as several antennas installed by the team of researchers themselves. The details of each plane captured by the antennas are compared with a list of aircraft registered to or regularly used by authoritarian regimes. When a match is found, a message is published on the website.
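The matching step described above can be sketched in a few lines. This is only an illustration under stated assumptions, not Dictator Alert's actual code: it assumes each sighting carries the 24-bit ICAO address that aircraft broadcast over ADS-B, and the names `Sighting`, `WATCHLIST`, and `find_matches`, along with the sample values, are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sighting:
    icao_hex: str  # 24-bit ICAO aircraft address, written as hex
    lat: float
    lon: float


# Hypothetical watchlist: aircraft address -> label. Placeholder values only.
WATCHLIST = {
    "abc123": "Placeholder regime A",
    "def456": "Placeholder regime B",
}


def find_matches(sightings, watchlist):
    """Return one alert message per watchlisted aircraft seen."""
    alerts = []
    already_reported = set()
    for s in sightings:
        key = s.icao_hex.strip().lower()  # normalise before comparing
        if key in watchlist and key not in already_reported:
            already_reported.add(key)
            alerts.append(
                f"{watchlist[key]}: aircraft {key} "
                f"seen at ({s.lat:.2f}, {s.lon:.2f})"
            )
    return alerts


if __name__ == "__main__":
    observed = [
        Sighting("ABC123 ", 46.2, 6.1),  # matches after normalisation
        Sighting("ffffff", 0.0, 0.0),    # not on the watchlist
        Sighting("abc123", 46.3, 6.2),   # repeat sighting, reported once
    ]
    for message in find_matches(observed, WATCHLIST):
        print(message)
```

Keeping the watchlist as a plain lookup table makes the comparison a constant-time check per sighting; in a real deployment, the alert would be published to a website or social feed instead of printed.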

Freudenthal presented the methodology of acquiring information behind Dictator Alert. Some people in the audience disagreed with the panellists, arguing that a reductive definition of ‘dictator’ might questionably influence the outcomes of the project, considering that some elected leaders from countries listed as democracies are also responsible for crimes, secrets, and human rights violations. The investigative journalist responded by explaining that Dictator Alert is guided by the Democracy Index published by The Economist. The Index first appeared in 2006, categorising countries as full democracies, flawed democracies, hybrid regimes, and authoritarian regimes, based on 60 indicators grouped in different categories, measuring pluralism, civil liberties, and political culture.

The Disruption Network Lab organised the workshop Berlin’s Sky, An Afternoon Investigation on the day following the 17th Conference. Participants gathered at the former Berlin airport, Tempelhofer Feld, to conduct guided research using antennas and laptops to track the sky and spot anomalies above the city.

The conference closed with the investigation by Forensic Architecture – Horizontal Verification and the Socialised Production of Evidence. Team member Robert Trafford presented the organisation, founded to investigate human rights violations using a range of techniques that flank classical investigation methods, including open-source investigation, video analysis, spatial and architectural practice, and digital modelling. They work with and on behalf of communities who have been affected by state and military violence, producing evidence for legal forums, human rights organisations, investigative reporters and media, as well as for arts and cultural institutions.

In Trafford's analysis, conflict, violence, and human rights violations have become heavily mediatised, and because of the “open source revolution” and smartphones, facts are often documented and relayed to the world by fragments of video material. Media sometimes report on these facts in ways which seem to make them less clear, instead of allowing better understanding. Forensic Architecture is in part a set of technical and theoretical tools for unpacking those mediatised facts, to access the truth which often exists behind and between the fragments of files that are released or leaked, and to prove human rights violations. It relies on the prevalence of open-source video material and tries to bring order to fake-news and post-truth communication, offering a new model for collectively and collaboratively constructing truths. Trafford pointed out that people today seem to be widely rejecting the idea that the institutions they might previously have trusted to assemble facts and information are still able to accomplish this delicate task. Often truth seems to be created elsewhere, possibly behind a wall of closed sources. The Internet and the consequent open-source revolution exploded the stability of that classic system of information, and those institutions are no longer providing truths around which people are willing or able to orient themselves. For better or worse, the vertical has been supplanted by the horizontal, Trafford said.

As those institutions falter, a certain breed of political actors – largely from populist and far-right parties – has been gaining media and institutional power all over the world for the last five years, encouraging the public to believe that our societies are soaked in misinformation, and that there is no possibility of reaching out and acquiring reliable facts we can all agree on and orient ourselves with.

More and more often, online and offline, we read of individuals saying that we should not trust traditional news outlets or institutions that encourage us to believe that they can guarantee independent and free information. It is under this cover of equivocation and uncertainties that the human rights violations of the 21st century are being carried out and subsequently concealed.

Forensic Architecture’s challenge is to expose this misrepresentation of things, and to offer a kind of counter-truth to official versions of relevant facts. Its researchers collect little grains, clues they find inside videos, pictures, and articles, which they try to organise in certain ways, reassembling them into an independent analysis. By bringing different perspectives into an ongoing practice in which the development of facts and evidence is socialized, the project encourages open and horizontal verification.

The moderator of this last session of the conference, Laurie Treffers, mentioned the idea of counter forensics. By integrating and working across different forms of knowledge, and across different institutions and disciplines – which may at times appear like they have nothing in common or that they speak in entirely different registers – horizontal verification is about unifying those for reasons of mutual protection, mutual security, and mutual reinforcement.

Trafford gave examples of Forensic Architecture’s work, such as working closely with the Gaza-based Al Mezan Center for Human Rights, the Tel Aviv-based Gisha Legal Center for Freedom of Movement, and the Adalah Legal Center for Arab Minority Rights in Haifa, when they examined the environmental and legal implications of the Israeli practice of aerial spraying of herbicides along the Gaza border.

To this end, the investigation sought to define if and how airborne herbicides travel into Gaza; how far into its territories they entered; what concentration of herbicide and what damage to the farmland on the Gazan side of the border can be calculated. The analysis of several first-hand videos, collected in the field, revealed that aerial spraying by commercial crop-dusters flying on the Israeli side of the border generally mobilises the wind to carry the chemicals into the Gaza Strip at damaging concentrations. This is a constant primary effect.

The videos used for the investigations supported the testimonies of farmers that, prior to spraying, the Israeli military uses the smoke from a burning tire to confirm the westerly direction of the wind, thereby carrying the herbicides from Israel into Gaza.

Forensic Architecture modelled the Israeli flight paths and geo-located them, compared metadata and video material, and engaged fluid dynamics experts from the University of London to look at what the potential distribution of those chemicals would be from the heights that the plane was flying, in the wind conditions that could be calculated. The investigation proves that each spray leaves behind a unique destructive signature.

“Along with the regular bulldozing and flattening of residential and farmland, aerial herbicide spraying is one part of a slow process of ‘desertification’, that has transformed a once lush and agriculturally active border zone into parched ground, cleared of vegetation,” Trafford said.

Analyses of the evidence derived from vegetation on the ground, civilian testimony, and the environmental elements mobilized in the spraying event showed that the Israeli practice of aerial fumigation at times when the wind is blowing into Gaza causes damage to farmland hundreds of meters inside the fields.

Once again, the Disruption Network Lab created a forum for discussion, to define the role of citizens in making a change in the information sphere, highlighting local and international stories, tools, and tactics for social change built on courageous grassroots reporting and investigations. The Disruption Network Lab invited guests to challenge laws that effectively criminalise journalism and whistleblowing. The conference went beyond the usual dichotomy between journalists and activists, official media and independent media, and opened up a dialogue among different fields of expertise to discuss and present opportunities for collaboration in reporting misinformation, corruption, abuse, power asymmetries, and injustice.

CITIZENS OF EVIDENCE presented experts working on anti-corruption, investigative journalism, data policy, political activism, open source intelligence, video storytelling, whistleblowing, and truth-telling, who shared community-based stories to increase awareness of sensitive subjects. Bottom-up approaches and methods that include the community in the development of solutions appear to be fundamental. Projects that enable collectives, minorities, and marginalized communities to develop and use tools to systematically combat inequalities, injustices, and impunity should be strengthened.

Moreover, on the 29th of October, the CPJ published the 2019 Global Impunity Index, putting a spotlight on countries where journalists are slain and their killers go free. During the 10-year index period, 318 journalists were murdered for their work worldwide and no perpetrators have been successfully prosecuted in 86% of those cases. Last year, CPJ recorded complete impunity in 85% of cases. Historically, this number has been closer to 90%. All participants at the conference expressed their concern about this situation.

It is important to doubt and to double-check relevant news, as governments and private corporations have proved too often that they prefer secrecy and manipulation to transparency and accountability. It is also important to verify constantly whether media outlets, or a single journalist, are actually independent. But this should not be used to weaken independent information and undermine the principles of particular constitutional importance, regarded as ‘higher law’, on which it is based. Journalists and citizen reporters are already alone in their work.

CITIZENS OF EVIDENCE was curated by Tatiana Bazzichelli, developed in cooperation with Transparency International. It was the third in Disruption Network Lab’s 2019 series ‘The Art of Exposing Injustice’. Videos of the conference are also available on YouTube. For details of speakers and topics, please visit the event page here: https://www.disruptionlab.org/citizens-of-evidence.

To follow the Disruption Network Lab, sign up for its newsletter with information on conferences, ongoing research, and projects. You may also find the organisation on Twitter and Facebook.

The next Disruption Network Lab event ‘ACTIVATION – COLLECTIVE STRATEGIES TO EXPOSE INJUSTICE’ is planned for November 30th, in Kunstquartier Bethanien Berlin. More info here: https://www.disruptionlab.org/activation

Image Credit:

Elena Veronese for Disruption Network Lab

Featured Image:

Graphic courtesy of Disruption Network Lab

Michel Bauwens is one of the foremost thinkers on the peer-to-peer phenomenon. Belgian-born and currently resident in Chiang-Mai, Thailand, he is founder of the Foundation for P2P Alternatives.

It’s a commonplace now that the peer-to-peer movement opens up new ways of creating and relating to others. But you’ve explored the implications of P2P in depth, in particular its social and political dimensions. If I understand right, for you the phenomenon represents a new condition of capitalism, and I’m interested in how that new condition impacts on the development of culture – in art and also architecture and urban form.

As a bit of a background, I’d like to look at what you’ve identified as the simultaneous “immanence” and “transcendence” of P2P: it’s interdependent with capital, but also opposed to it through the basic notion of the Commons. Could you elaborate on this?

With immanence, I mean that peer production currently co-exists within capitalism and is used by and beneficial to capital. Contemporary capitalism could not exist without the input of free social cooperation, which creates a surplus of value that capital can monetize and use in its accumulation processes. This is very similar to coloni, early serfdom, being used by the slave-based Roman Empire and elite, and capitalism used by feudal forces to strengthen their own system.

BUT, equally important is that peer production also has within itself elements that are anti-, non- and post-capitalist. Peer production is based on the abundance logic of digital reproduction, and what is abundant lies outside the market mechanism. It is based on free contributions that lie outside of the labour-capital relationship. It creates a commons that is outside commodification and is based on sharing practices that contradict the neoliberal and neoclassical view of human anthropology. Peer production creates use value directly, which can only be partially monetized in its periphery, contradicting the basic mechanism of capitalism, which is production for exchange value.

So, just as serfdom and capitalism before it, it is a new hyperproductive modality of value creation that has the potential of breaking through the limits of capitalism, and can be the seed form of a new civilisational order.

In fact, it is my thesis that it is precisely because it is necessary for the survival of capitalism, that this new modality will be strengthened, giving it the opportunity to move from emergence to parity level, and eventually lead to a phase transition. So, the Commons can be part of a capitalist world order, but it can also be the core of a new political economy, to which market processes are subsumed.

And how do you see this condition – the relationship to capital – coming to a head?

I have a certain idea about the timing of the potential transition. Today, we are clearly at the point of emergence, but also coinciding with a systemic crisis of capitalism and the end of a Kondratieff wave.

There are two possible scenarios in my mind. The first is that capital successfully integrates the main innovations of peer production on its own terms, and makes it the basis of a new wave of growth, say of a green capitalist wave. This would require a successful transition away from neoliberalism, the existence of a strong social movement which can push a new social contract, and an enlightened leadership which can reconfigure capitalism on this new basis. This is what I call the high road. However, given the serious ecological and resource crises, this can at the most last 2-3 decades. At this stage, we will have both a new crisis of capitalism, but also a much stronger social structure oriented around peer production, which will have reached what I call parity level, and can hence be the basis of a potential phase transition.

The other scenario is that the systemic crisis points such as peak oil, resource depletion and climate change are simply too overwhelming, and we get stagnation and regression of the global system. In this scenario, peer-to-peer becomes the method of choice of sustainable local communities and regions, and we have a very long period of transition, akin to the transition at the end of the Roman Empire until the consolidation of feudalism during the first European revolution of 975. This is what I call the low road to peer to peer, because it is much more painful and combines both progress towards p2p modalities but also an accelerating collapse of existing social logics.

That’s a less optimistic scenario… what form of conflict would this involve?

The leading conflict is no longer just between capital and labour over the social surplus, but also between the relatively autonomous peer producing communities and the capital-driven entrepreneurial coalitions that monetize the commons. This has a micro-dimension, but also a macro-dimension in the political struggles between the state, the private sector and civil society.

I see different steps of political maturation of this new sphere of peer power. First, attempts to create networks of sympathetic politicians and policy-makers; then, new types of social and political movements that take up the Commons as their central political issue, and aim for reforms that favour the autonomy of civil society; finally, a transformation of the state towards what I call a Partner State which coincides with a fundamental re-orientation of the political economy and civilization. You will notice that this pretty much coincides with the presumed phases of emergence, parity and phase transition.

Most likely, acute conflict may arise around resource depletion and the protection of these resources through commons-related mechanisms. Survival issues will dictate the fight for the protection of existing commons and the creation of new ones.

You often cite Marx, who of course also wrote at a time of conflict and social change provoked by technological and economic development. Does this tension you’re describing fit in his notion of contradictory forces conflicting – thesis, antithesis, synthesis – in other words, is this a historical materialist process?

I don’t quite use the same language, because I use Marx along with many other sources. I never use Marx exclusively or ideologically, but as part of a panoply of thinkers that can enlighten our understanding. My method is not dialectical but integrative, i.e. I strive to integrate both individual-collective aspects and objective-subjective aspects, and to avoid any reductionist and deterministic interpretations. Though I grant much importance to technological affordances, I do not adhere to technological determinism, and I don’t find that I pay much attention to historical materialism, since I see a feedback loop between culture, human intentionality, and the material basis. Technology has to be imagined before it can be invented.

My optimism is grounded in the hyperproductivity of the new modes of value creation, and on the hope that social movements will emerge to defend and expand them. If that fails to happen, then the current unsustainable infinite growth system will wreak great havoc on the biosphere and humanity.

As you say, classical or Marxist economics don’t really suffice to describe the current situation. Is one aspect of this problem that the classical distinction between use and exchange doesn’t fit with a situation in which many of the “uses” are ludic, and have an exchange system built into them? I’m thinking of on-line gaming specifically. But it has always been difficult to place art in this simple use/exchange polarity. Do you see any revisions to that polarity today?

I’m not sure the ludic aspect is crucial, as use value is agnostic to the specific kind of use, just as peer production is agnostic as to the motivation of the contributors. However, our exponential ability to create use value without intervention of the commodity form, with only a linear expansion of the monetization of peer platforms, does create a double crisis of value. On the one hand, capital is valuing the surplus of social value through financial mechanisms, and is not restituting that value to labour, just as proprietary platforms do not pay their value producers; on the other hand, peer producers are producing more and more that can’t be monetized. So we have financial crisis on the one hand, a crisis of accumulation and a crisis of precarity on the other side. This means that the current form of financial capitalism, because of the broken feedback loop between value creation and realization, is no longer an appropriate format.

Regarding your ‘integrative method’, this is a much more sophisticated take on economics that places it in relationship to other, cultural, dimensions of human life. And the imagination is central to it. Given that, do you see any special role for art in this transition?

Art is a precursor of the new form of capitalism, which you could say is based on the generalization of the ‘art form of production’. Artists have always been precarious, and have largely fallen outside of commodification, relying on other forms of funding, but peer production is a very similar form of creation that is now escaping art and becoming the general modality of value creation.

My take is that commodified art has become too narcissistic and self-referential and divorced from social life. I see a new form of participatory art emerging, in which artists engage with communities and their concerns, and explore issues with their added aesthetic concerns. Artists are ideal trans-disciplinary practitioners, who are, just as peer producers, largely concerned with their ‘object’, rather than predisposed to disciplinary limits. As more and more of us have to become ‘generally creative’, artists also have a crucial role as possible mentors in this process. I was recently invited to attend the Article Biennale in Stavanger, Norway, as well as the artist-led herbologies-foraging network in Finland and the Baltics, and this participatory emergence was very much in evidence; it was heartening to see.

We might see, as opposed to that sort of grassroots participatory engagement, the entities you refer to as the “netarchies.” Their power lies in the ownership of the platform they exploit for harvesting user-originating information and activities. How hegemonic is this ownership? At what point does it become impossible to create a “counter-Google”?

The hegemony is relative, and is stronger in the sharing economy, where individuals do not connect through collectives and have weak links to each other. The hegemony is much weaker in the true commons-oriented modalities of production, where communities have access to their own collaborative platforms and for-benefit associations maintaining them.

The key terrain of conflict is around the relative autonomy of the community and commons vis a vis for-profit companies. I am in favour of a preferential choice towards entrepreneurial formats which integrate the value system of the commons, rather than profit-maximisation. I’m very inspired by what David de Ugarte calls phyles, i.e. the creation of businesses by the community, in order to make the commons and their attachment to it viable and sustainable over the long run. So, I hope to see a move from the current flock of community-oriented businesses, towards business-enhanced communities. We need corporate entities that are sustainable from the inside out, not just by external regulation from the state, but from their own internal statutes and linkages to commons-oriented value systems.

Counter-googles are always possible, as platforms are always co-dependent on the user communities. If they violate the social contract in a too extreme way, users can either choose different platforms, or find a commons-oriented group that develops an independent alternative, which in turn maintains the pressure on the corporate platforms. I expect Google to be smart enough to avoid this scenario though.

As you’ve said elsewhere, many of these issues are about a new form of governance. Do you see any of this as particularly urban in character — I mean, about organization at the smaller scale, regionally focused, as opposed to at the level of the nation state. Does propinquity matter at all to this — the importance of living together? This seems to relate to a — not a contradiction or tension exactly, but a complication of the P2P notion — that relationships are dispersed, yet a number of the parallels you draw with historical models (for example the Commons) connect with social situations in which people lived very close together. A fairly strong notion in urbanistic thinking is that propinquity is a good thing. In the past that was part of many artistic relationships also: cities as milieux of artistic production/creativity, artists’ colonies; working cheek by jowl with other creative people and breathing the same air. Is this notion in any sense undermined by dispersed networks?

I think we are seeing the endgame of neoliberal material globalization based on cheap energy, and hence a necessary relocalization of production, but at the same time, we have new possibilities for online affinity-based socialization which is coupled with resulting physical interactions and community building. We have a number of trends which weaken the older forms of socialization. The imagined community of the nation-state is weakening because of the globalized market; because of the new possibilities for relocalization that the internet offers, which include a new lease of life for mostly reactionary and more primary ethnic, regional and religious identities; and also because of this important third factor, i.e. socialization through transnational affinity-based networks.

What I see are more local value-creation communities, but ones that are globally linked. And out of that may come new forms of business organization, which are substantially more community-oriented. I see no contradiction between global open design collaboration and local production; both will occur simultaneously, so the relocalized reterritorialisation will be accompanied by global tribes organized in ‘phyles.’ I think the various commons based on shared knowledge, code and design, will be part of these new global knowledge networks, but closely linked to relocalized implementations.

One interesting question is what forms of urbanism come out of p2p thinking. The movement is in the process of thinking this through, in fact a definition of p2p urbanism was just published by the “Peer-to-peer Urbanism Task Force” (http://p2pfoundation.net/Peer-to-Peer_Urbanism).

This promotes, in general terms, bottom-up rather than centrally planned cities; small-scale development that involves local inhabitants and crafts; and a merging of technology with practical experience. All resonant in various ways with p2p approaches. But this statement also provokes a few questions: It calls for an urbanism based on science and function; in fact it explicitly promotes a biological paradigm for design. At the risk of over-categorizing, isn’t this a modernist understanding of design — or if not, how is it different? This document also refers to specific schools of urban design: Christopher Alexander, and also New Urbanism. On the side of socio-economics though, New Urbanism has been criticized (for example in David Harvey’s Spaces of Hope); some see it as nostalgic and in the end directed at a narrow segment of the population. Christopher Alexander’s work on urban form has also been criticized as, being based on consensus, restrictive in its own ways. In fact, might not p2p principles call for creation of spaces that allow dissent and even shearing-off from the mainstream? Might there be a contradiction built into trying to accommodate the desires for consensus and for freedom? Contradiction can be a source of vitality, certainly in art; but it can raise some tensions when you get to built form and a shared public realm.

I cannot speak for the bio- or p2p urbanism movement, which is itself a pluralistic movement, but here’s what I know about this ‘friendly’ movement. I would call p2p urbanism not a modernist but a transmodernist movement. It is a critique of both modernist and postmodern approaches in architecture and urbanism; takes critical stock of the relative successes and failings of the New Urbanist school; and then takes a trans-historical approach, i.e. it critically re-integrates the premodern, which it no longer blankly rejects as modernists would do. I don’t think that makes it a nostalgic movement, but rather it simply recognizes that thousands of years of human culture do have something to teach us, and that even as we ‘progressed’, we also lost valuable knowledge. Finally, I think there is a natural affinity between the prematerial and post-material forms of civilization. The accusation of elitism is I think also unwarranted, given what I know of the work of bio-urbanists amongst slumdwelling communities. However, I take your critique of consensus very seriously, without knowing how they answer that. You are right, that is a big danger to guard against, and one needs to strive for a correct balance between agreed-upon frameworks, that are community and consensus-driven, and the need for individual creativity and dissent. Nevertheless, compared to the modernist prescriptions of functional urbanism, I don’t think that danger should be exaggerated.

Following on this track, I’d like to pose another question that relates to living together. The P2P concept depends on the difficulty of controlling the activity of peers on a network: i.e. it’s impossible to lock down the internet. Doesn’t this degree of freedom also eliminate those social controls that might be considered “healthy” – for example, controls over criminal activity. David Harvey (to bring him in again), in his paper “Social Justice, Postmodernism and the City”, lists social controls among several elements of postmodern social justice. When the grand narratives have been replaced by small narratives, there remains a need to limit some freedoms. How does p2p thinking deal with this?

I think we can summarize the evolution of social control in three great historical movements. In premodern times, people lived mostly in holistic local communities, where everyone could see one another, and social control was very strong. At the same time, vis a vis more far-away institutions, such as for example the monarchy, or the feudal lord, or say in more impersonal communities such as large cities, compliance was often a function of fear of punishment. With modernity, we have a loss of the social control through the local community, but a heightened sense of self through guilt, combined with the fine-grained social control obtained through mass institutions, described for example by Michel Foucault. The civility obtained through the socialization of the imagined community that was the nation state, and the educational and media institutions at its disposal, also contributed to social control and training for civil behaviour.

My feeling about peer-to-peer networks is that they bring a new form of very real socialization through value affinity, and hence, a new form of denser social control in those specific online communities which also usually have face-to-face socialities associated with them. But this depends on whether the community has a real value affinity and a common project, in which case I think social control is ‘high’, because of the contributory meritocracy that determines social standing. On the contrary, in the looser form of sharing communities, say YouTube comments for example, we get the type of social behaviour that comes from anonymity and not really being seen.

So the key challenge is to create real communities and real socialization. Peer to peer infrastructures are often holoptical, i.e. there is a rather complete record of behaviour and contributions over time, and hence, a record of one’s personality and behaviour. This gives a bonus to ‘good ethical behaviour’ and attaches a higher price to ‘evil’. On the other hand, in the looser communities, subject to more indiscriminate swarming dynamics, negative social behaviour is more likely to occur.

A key difference between contemporary commons and those of the past is that the new ones are immaterial and global. The model for P2P exchange seems to be of autonomous agents relating and forming new communities not based on membership in an originary cultural group. Given a global distribution, how do local, cultural factors play into the model of globalized distributed networks? How does P2P accommodate cultural specificity, especially specificity with deep historical roots; and how does that accommodate the development of new culture, art?

In my view, the digital commons reconfigure both the local and the global. I think we can see at least three levels, i.e. a local level, where local commons are created to sustain local communities, see for example the flowering of neighbourhood sharing systems; then there are global discourse communities, but they are constrained by language; so rather than national divisions, which still exist but erode somewhat as a limitation for discourse exchange, there is a new para-global level around shared language. At each level though, cultural difference has to be negotiated and taken into account. If there is no specific effort at diversity and inclusion, then affinity-based communities reproduce existing hierarchies. For example, the free software world is still dominated by white males. Without specific efforts to make a dominant culture, which has exclusionary effects, adaptive to inclusion, deeper participation is effectively discouraged. Of course, as the dominant culture may not be sufficiently sensitive, it is still incumbent on minoritarian cultures to make their voice and annoyances heard. Obviously, each culture will have to go through an effort to make their culture ‘available’ through the networks, but I think the specific role of artists, now operating more collectively and collaboratively than before, is to experiment with new aesthetic languages, so that non-conceptual truths can be communicated.

The innovation I see as most important though is in terms of the global-local, i.e. a relocalization of production, but within the context of global open design and knowledge communities, probably based on language. I also see a distinct possibility for a new form of global organization, i.e. the phyle I mentioned earlier, as fictionalized in Neal Stephenson’s The Diamond Age and operationalized by lasindias.net. These are transnational value communities that created enterprises to sustain their livelihoods.

I see the key challenge, not just to develop ‘relationality’ between individuals, as social networks are doing very well, but to develop new types of community, such as the phyle, which are not just loose networks, but answer the key question of sustainability and solidarity.

In terms of culture, what I see developing is a new transnational culture, based on value and discourse communities, based on language, that are neither local, nor national, nor fully cosmopolitan, but ‘trans-national’.

And the creative relationships between artists can in some sense be a model for this?

Artists have been precarious in almost all periods of history, and their social condition reflects what is now very common for ‘free culture’ producers today, so studying sustainability and livelihood practices of artist communities seems to me to be a very interesting lead in terms of linking with previous historical experience. I understand that artists now have increasingly collaborative practices and forms of awareness. Unfortunately, my own knowledge of this is quite limited so this is really also an open appeal for qualified researchers to link art historical forms of livelihood, with current peer production. In some ways, we are all now becoming precarious artists under neoliberal cognitive capitalism!

Co-published by Furtherfield and The Hyperliterature Exchange.

Last October I received an e-mail headed “Introducing Vook”:

The Vook Team is pleased to announce the launch of our first vooks, all published in partnership with Atria, an imprint of Simon & Schuster, Inc. These four titles… elegantly realize Vook’s mission: to blend a book with videos into one complete, instructive and entertaining story.

The e-mail also included a link to an article about Vooks in the New York Times:

Some publishers say this kind of multimedia hybrid is necessary to lure modern readers who crave something different. But reading experts question whether fiddling with the parameters of books ultimately degrades the act of reading…

Note the rather loaded use of the words “lure”, “crave”, “fiddling” and “degrades”. The phraseology seems to suggest that modern readers are decadent and listless thrill-seekers who can scarcely summon the energy to glance at a line of text, let alone plough their way through an entire book. If an artistic medium doesn’t offer them some form of instant gratification – glamour, violence, excitement, pounding beats, lurid colours, instant melodrama – then it simply won’t get their attention. But publishers have a moral duty not to pander to their readers’ base appetites: the New York Times article ends by quoting a sceptical “traditional” author called Walter Mosley –

“Reading is one of the few experiences we have outside of relationships in which our cognitive abilities grow,” Mr. Mosley said. “And our cognitive abilities actually go backwards when we’re watching television or doing stuff on computers.”

In other words, reading from the printed page is better for your mental health than watching moving pictures on a screen: an argument which has been resurfacing in one form or another at least since television-watching started to dominate everyday life in the USA and Europe back in the 1950s. To some extent this is the self-defence of a book-loving and academically-inclined intelligentsia against the indifference or hostility of popular culture – but in the context of a discussion of Vooks, it can also be interpreted as a cry of irritation from a publishing industry which is increasingly finding the ground being scooped from under its feet by younger, sexier, more attention-grabbing forms of entertainment.

The fact that the Vook publicity-email links to an article which is generally rather sniffy and unfavourable about the idea of combining video with print no doubt reflects a belief that all publicity is good publicity – but it is also indicative of the publishing industry’s mixed attitudes towards the digital revolution. On the whole, up until recently, they have tended to simply wish it would just go away; but they have also wished, sporadically, that they could grab themselves a piece of the action. But those publishers who have attempted to ride the digital surf rather than defy the tide have generally put their efforts and resources into re-packaging literature instead of re-thinking it: and the evidence of this is that the recent history of the publishing industry is littered with ebooks and e-readers, whereas attempts to exploit the digital environment by combining text with other media in new ways have generally been ignored by the publishing mainstream, and have therefore remained confined to the academic and experimental fringes.

The publishing industry’s determination to make the digital revolution go away by ignoring it has been even more evident in the UK than in the US. The 1997 edition of The Writers’ and Artists’ Yearbook, for example, contains no references to ebooks or digital publishing whatsoever, although it does contain items about word-processing and dot-matrix printers. On the other hand, Wired magazine was already publishing an in-depth article about ebooks in 1998 (“Ex Libris” by Steve Silberman, http://www.wired.com/wired/archive/6.07/es_ebooks.html) which describes the genesis of the SoftBook, the RocketBook and the EveryBook, as well as alluding to their predecessor, the Sony BookMan (launched in 1991). Even in the USA, however, enthusiasm for ebooks took a tremendous knock from the dot-com crash of 2000. Stephen Cole, writing about ebooks in the 2010 edition of The Writers’ and Artists’ Yearbook, summarises their history as follows:

Ebook devices first appeared as reading gadgets in science fiction novels and television series… But it was not until the late 1990s that dedicated ebook devices were marketed commercially in the USA… A stock market correction in 2000, combined with the generally poor adoption of downloadable books, sapped all available investment capital away from internet technology companies, leaving a wasteland of broken dreams in its wake. Over the next two years, over a billion dollars was written off the value of ebook companies, large and small.

After 2000, there was a widely-held view (which I shared) that the ebook experiment had been tried and failed: paper books were a superb piece of technology, and perhaps a digital replacement for them was simply never going to happen. There were numerous problems with ebooks: too many different and incompatible formats, too difficult to bookmark, screens hard to read in direct sunlight, couldn’t be taken into the bath, etc. But ebooks have always had several big points in their favour: you can store hundreds on a computer, whereas the same books in paper form demand both physical space and shelving; you can find them quickly once you’ve got them; and they’re cheap to produce and deliver. Despite the dot-com crash and general indifference of the reading public, publishers continued to bring out electronic editions of books, and a small but growing number of people continued to download them.

Things really started to change with the launch of Amazon’s Kindle First Generation in 2007. It sold out in five and a half hours. With the Kindle, the e-reader went wireless. Instead of having to buy books on CDs or cartridges and slot them into hand-helds, or download them onto computers and then transfer them, readers using the Kindle could go right online using a dedicated network called the Whispernet, and get themselves content from the Kindle store.

Despite this big step forward, the Kindle was still an old-school e-reader in some respects: it had a black and white display, and very limited multimedia capabilities. The Apple iPad changed the rules again when it was launched in April 2010. The iPad isn’t just an e-reader – it’s “a tablet computer… particularly marketed for consumption of media such as books and periodicals, movies, music, and games, and for general web and e-mail access” (Wikipedia, http://en.wikipedia.org/wiki/I-pad). Its display screen is in colour, and it can play MP3s and videos or browse the Web as well as displaying text. It also goes a long way towards scrapping the rule that each e-reader can only display books in its own proprietary format. The iPad has its own bookstore – iBooks – but it also runs a Kindle app, meaning that iPad owners can buy and display Kindle content if they wish.

It seems we may finally be reaching the point where ebooks are going to pose a genuine challenge to print-and-paper. Amazon have just announced that Stieg Larsson’s The Girl with the Dragon Tattoo has become the first ebook to sell more than a million copies; and also that they are now selling more copies of ebooks than books in hardcover.

It is certainly also significant that the past couple of years have seen a sudden upsurge of interest in the question of who owns the rights over digitised book content, and whether ordinary copyright laws apply to online text – a debate which has been brought to the boil by a court case filed against Google in 2005 by the Authors Guild of America.

In 2002, under the title of “The Google Books Library Project”, Google began to digitise the collections of a number of university libraries in the USA (with the libraries’ agreement). Google describes this project as being “like a card catalogue” – in other words, primarily displaying bibliographic information about books rather than their actual contents. “The Library Project’s aim is simple”, says Google: “make it easier for people to find relevant books – specifically, books they wouldn’t find any other way such as those that are out of print – while carefully respecting authors’ and publishers’ copyrights.” They do concede, however, that the project includes more than bibliographic information in some instances: “If the book is out of copyright, you’ll be able to view and download the entire book.” (http://books.google.com/googlebooks/library.html)

In 2004 Google launched Book Search, which is described as “a book marketing program”, but structured in a very similar way to the Library Project: displaying “basic bibliographic information about the book plus a few snippets”; or a “limited preview” if the copyright holder has given permission, or full texts for books which are out of copyright – in all cases with links to places online where the books can be bought. Interestingly, my own book Outcasts from Eden is viewable online in its entirety, although it is neither out of copyright nor out of print, which casts a certain amount of doubt on Google’s claim to be “carefully respecting authors’ and publishers’ copyrights”.

In 2005 the Authors Guild of America, closely followed by the Association of American Publishers, took Google to court on the basis that books in copyright were being digitised – and short extracts shown – without the agreement of the rightsholders. Google suspended its digitisation programme but responded that displaying “snippets” of copyright text was “fair use” under American copyright law. In October 2008 Google agreed to pay $125 million to settle the lawsuit – $45.5 million in legal fees, $45 million to “rightsholders” whose rights had already been infringed, and “$34.5 million to create a Book Rights Registry, a form of copyright collective to collect revenues from Google and dispense them to the rightsholders. In exchange, the agreement released Google and its library partners from liability for its book digitization.” (http://en.wikipedia.org/wiki/Google_Book_Search_Settlement_Agreement). The settlement was queried by the Department of Justice, and a revised version was published in November 2009, which is still awaiting approval at the time of writing.

The settlement is a complex one, but its most important provision as regards the future of publishing seems to be that “Google is authorised to sell online access to books (but only to users in the USA). For example, it can sell subscriptions to its database of digitised books to institutions and can sell online access to individual books.” 63% of the revenue thus generated must be passed on to “rightsholders” via the new Registry. “The settlement does not allow Google or its licensees to print copies of books in copyright.” (“The Google Settlement” by Mark Le Fanu, The Writers’ and Artists’ Yearbook 2010, pp. 631-635).

Google, it will be noted, are now legally within their rights to continue their digitisation programme. This means they don’t have to ask anyone’s permission before they digitise work. If authors or publishers would prefer not to be listed by Google it is up to them to lodge an objection online. Google would argue that in launching their Library Project and Book Search they have merely been seeking to make their search facilities more complete, and thus to “make it easier for people to find relevant books” – but whether or not they have been deliberately plotting their course with wider strategic issues in mind, the end result has been to make them the biggest single player – almost the monopoly-holder – where digital book rights are concerned. As a reflection of this, an organisation called the Open Book Alliance has been set up to oppose the settlement, supported by the likes of Amazon and Yahoo: “In short,” their website claims, “Google’s book digitization strategy in the U.S. has focused on creating an impenetrable content monopoly that violates copyright laws and builds an unfair and legally insurmountable lead over competitors.” (http://www.openbookalliance.org/)

Whatever the pros and cons of the Google Settlement, it has undoubtedly helped to focus the minds of writers and publishers alike on the question of digital rights. Copyright laws and publishers’ contracts were designed to deal with print and paper, and until very recently there has been almost no reference at all to electronic publication. Writers who have agreed terms with a publisher for reproduction of their work in print have theoretically been at liberty to re-publish the same work on their own websites, or perhaps even to collect another fee for it from a digital publisher; and conversely, publishers who have signed a contract to bring out an author’s work in print have sometimes felt free to reproduce it electronically as well, without asking the writer’s permission or paying any extra money.

But things are beginning to change. A June 2010 article in The Bookseller notes that Andrew Wylie, one of the most prestigious of UK literary agents, “is threatening to bypass publishers and license his authors’ ebook rights directly to Google, Amazon or Apple because he is unhappy with publishers’ terms.” This is partly because he believes electronic rights are being sold too cheaply to the likes of Apple: “The music industry did itself in by taking its profitability and allocating it to device holders… Why should someone who makes a machine – the iPod, which is the contemporary equivalent of a jukebox – take all the profit?” Clearly, electronic rights are going to be taken much more seriously from now on.

Further indications that authors, publishers and agents are beginning to wake up and smell the digital coffee can be found in the latest editions of The Writers’ and Artists’ Yearbook and The Writer’s Handbook. For those who are unfamiliar with them, these annual publications are the UK’s two main guides to the writing industry. The 2010 edition of The Writer’s Handbook opens with a keynote article from the editor, Barry Turner, entitled “And Then There was Google”. As the title indicates, its main subject is the Google settlement and its implications – but its broader theme is that the book trade has been ignoring the digital revolution for too long, and can afford to do so no longer:

In the States… sales of e-books are increasing by 50 per cent per year while conventional book sales are static. An indication of what is in store was provided at last year’s Frankfurt Book Fair where a survey of book-buying professionals found that 40 per cent believe that digital sales, regardless of format, will surpass ink on paper within a decade.

The Writers’ and Artists’ Yearbook is more conservative in tone, but if anything its coverage is more in-depth. It has an entire section titled “Writers and Artists Online”, which leads with an article about the Google settlement. In addition there are articles on “Marketing Yourself Online”, “E-publishing” and “Ebooks”. Even in the more general sections of the Yearbook there is a widespread awareness of how digital developments are affecting the book trade. For example, there is a review (by Tom Tivnan) of the previous twelve months in the publishing industry, which acknowledges the importance not just of ebooks but also of print-on-demand:

Amazon’s increasing power underscores how crucial the digital arena is for publishing… Ebooks remain a miniscule part of the market,… yet publishers and booksellers say they are pleasantly surprised at the amount of sales… And it is not all ebooks. The rise of print-on-demand (POD) technology (basically keeping digital files of books to be printed only when a customer orders it) means that the so-called “long tail” has lengthened, with books rarely going out of print… POD may soon be coming to your local bookshop. In April 2009, academic chain Blackwell had the UK launch of the snazzy in-store Espresso POD machine, which can print a book in about four minutes…

There is also an article about “Books Published from Blogs” (by Scott Pack):

Agents are proving quite proactive when it comes to bloggers. Some of the more savvy ones are identifying blogs with a buzz behind them and approaching the authors with the lure of a possible book deal… Many bestsellers in the years to come will have started out online.

Most of the emphasis in these articles falls on the impact which digital developments are having on the marketing of books rather than the practice of writing itself. But now and again there are signs of a creeping awareness that digitisation may actually change the ways in which our literature is created and consumed. In The Writer’s Handbook, Barry Turner attempts to predict how the digital environment may affect the practice of writing in the coming years:

Those of us who make any sort of living from writing will have to get used to a whole new way of reaching out to readers. Start with the novel. Most fiction comes in king-sized packages… Publishers demand a product that looks value for money… But all will be different when we get into e-books. There will be no obvious advantage in stretching out a novel because size will not be immediately apparent… Expect the short story to make a comeback… The two categories of books in the forefront of change are reference and travel. Their survival… is tied to a combination of online and print. Any reference or travel book without a website is in trouble, maybe not now, but soon.

Scott Pack’s article on “Books Published from Blogs” tends to focus on those aspects of a blog which may need remoulding to suit publication in book form; but an article by Isabella Pereira entitled “Writing a blog” is more enthusiastic about the blog as a form in its own right:

The glory of blogging lies not just in its immediacy but in its lack of rules… The best bloggers can open a window into private worlds and passions, or provide a blast of fresh air in an era when corporate giants control most of our media… Use lots of links – links uniquely enrich writing for the web and readers expect them… What about pictures? You can get away without them but it would be a shame not to use photos to make the most of the web’s all-singing, all-dancing capacities.

Even here, however, the advice stops short of videos, sound-effects or animations. Another article in The Writers’ and Artists’ Yearbook (“Setting up a Website”, by Jane Dorner) specifically forbids the use of animations: