Jonas Lund’s “The Fear Of Missing Out” (2013) is a series of gallery art objects made by the artist following the instructions of a piece of software they have written. It has gained attention following a Huffington Post article titled “Controversial New Project Uses Algorithm To Predict Art”.

Art fabricated by an artist following a computer-generated specification is nothing new. Prior to modern 2D and 3D printing techniques, transcribing a computer generated design into paint or metal by hand was the only way to present artworks that pen plotters or CNC mills couldn’t capture. But a Tamagotchi-gamer or Amazon Mechanical Turk-style human servicing of machine agency where a program dictates the conception of an artwork for a human artist to realize also has a history. The principles involved go back even further to the use of games of chance and other automatic techniques in Dada and Surrealism.

What is novel about The Fear Of Missing Out is that the program dictating the artworks is doing so based on a database derived from data about artworks, art galleries, and art sales. This is the aesthetic of “Big Data”, although it is not a big dataset by the definition of the term. Its source, and the database, are not publicly available, but assuming it functions as specified, the description of the program in the Huffington Post article about it is complete enough that we could re-implement it. To do so we would scrape Art Sales Index and/or Artsy and pull out keywords from entries to populate a database keyed on artist, gallery and sales details. Then we would generate text from those details that match a desired set of criteria such as gallery size and desired price of artwork.
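The re-implementation outlined above could be sketched roughly as follows. This is only an illustration: the records, the field names and the functions `matching_keywords` and `generate_instruction` are all hypothetical, and nothing here reflects Lund's actual code or data, which are not public. A small hand-written list stands in for the scraped database.

```python
import random

# Hypothetical stand-in for records scraped from an art sales site.
# Every artist, price and keyword here is invented for illustration.
RECORDS = [
    {"artist": "Artist A", "gallery_size": "small", "price": 4000,
     "keywords": ["video loop", "soap", "plinth"]},
    {"artist": "Artist B", "gallery_size": "large", "price": 25000,
     "keywords": ["neon tube", "carpet", "mirror"]},
    {"artist": "Artist C", "gallery_size": "small", "price": 6000,
     "keywords": ["inkjet print", "sand", "monitor"]},
]


def matching_keywords(records, gallery_size, max_price):
    """Collect keywords from records that fit the requested criteria."""
    pool = []
    for record in records:
        if record["gallery_size"] == gallery_size and record["price"] <= max_price:
            pool.extend(record["keywords"])
    return pool


def generate_instruction(records, gallery_size, max_price, rng=random):
    """Fill an imperative template with two distinct matching keywords."""
    pool = matching_keywords(records, gallery_size, max_price)
    if len(pool) < 2:
        raise ValueError("not enough matching keywords")
    thing, place = rng.sample(pool, 2)
    return f"Place the {thing} in the {place}."
```

Calling `generate_instruction(RECORDS, "small", 10000)` returns a sentence built only from keywords attached to small-gallery works priced at or below 10000. Note that the imperative template is hand-written in this sketch rather than derived from the data.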

What’s interesting about the text described in the Huffington Post article is that it’s imperative and specific: “place the seven minute fifty second video loop in the coconut soap”. How did the instruction get generated? Descriptions of artworks in artworld data sites describe their appearance and occasionally their construction, not how to assemble them. If it’s a grammatical transformation of scraped description text that fits the description of the project, but if it’s hand assembled that’s not just a database that has been “scraped into existence”. How did the length of time get generated? If there’s a module to generate durations that doesn’t fit the description of the project, but if it’s a reference to an existing 7.50 video it does.

The pleasant surprises in the output that the artist says they would not have thought of but find inspiring are explained by Edward de Bono-style creativity theory. And contemporary art oeuvres tend to be materially random enough that the randomness of the works produced looks like moments in such an oeuvre. Where the production differs both from corporate big data approaches and contemporary artist-as-brand approaches is that production is not outsourced. Lund makes the art that they use data to specify.

Later, the Huffington Post article mentions the difficulty of targeting specific artists. A Hirst artwork specification generator would be easy enough to create for artworks that resemble his existing oeuvre. Text generators powered by Markov chains were used as a tool for parodying Usenet trolls, and their strength lies in the predictability of the obsessed. Likewise postmodern buzzword generators and paper title generators parody the idées fixes of humanities culture.
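For readers unfamiliar with the technique, a word-level Markov chain text generator can be sketched in a few lines. This is a generic illustration, not the code of any of the parody generators mentioned; the function names `build_chain` and `generate` and the sample corpus are invented for the example.

```python
import random
from collections import defaultdict

# Invented sample corpus; a real generator would be fed an artist's
# catalogue entries or a troll's collected postings.
CORPUS = ("the spot painting is a painting of spots and the shark is "
          "a shark in a tank and the tank is a painting of a shark")


def build_chain(text, order=1):
    """Map each run of `order` words to the words seen following it."""
    words = text.split()
    chain = defaultdict(list)
    for i in range(len(words) - order):
        key = tuple(words[i:i + order])
        chain[key].append(words[i + order])
    return chain


def generate(chain, length=12, rng=random):
    """Walk the chain from a random starting key, stopping at dead ends."""
    key = rng.choice(sorted(chain))
    output = list(key)
    while len(output) < length:
        followers = chain.get(key)
        if not followers:
            break
        output.append(rng.choice(followers))
        key = tuple(output[-len(key):])
    return " ".join(output)
```

Every adjacent pair of words in the output already occurs somewhere in the corpus, which is why the results resemble their source so closely and why a narrow, repetitive source is the easiest to imitate.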

The output of such systems resembles the examples that they are derived from. Pivoting to a new stage in an artist’s career is something that would require a different approach. It’s possible to move, logically, to conceptual opposites using Douglas Hofstadter’s approaches. In the case of Hirst, cheap and common everyday materials (office equipment) become expensive and exclusive ones (diamonds) and the animal remains become human ones.

This principle reaches its cliometric zenith in Colin Martindale’s book “The Clockwork Muse: The Predictability of Artistic Change”. It’s tempting to dismiss the idea that artistic change occurs in regular cycles as the aesthetic equivalent of Kondratieff Waves, just as Kondratieff Waves are dismissed by mainstream economics. But proponents of both theories claim empirical backing for their observations.

In contrast to The Fear Of Missing Out’s private database and the proprietary APIs of art market sites there is a move towards Free (as in freedom) or Open Data for art institutions. The Europeana project to release metadata for European cultural collections as linked open data has successfully released data from over 2000 institutions across the EU. The Getty Foundation has put British institutions that jealously guard their nebulously copyrighted photographs of old art to shame by releasing almost 5000 images freely. And most recently the Tate gallery in the UK has released its collection metadata under the free (as in freedom) CC0 license.

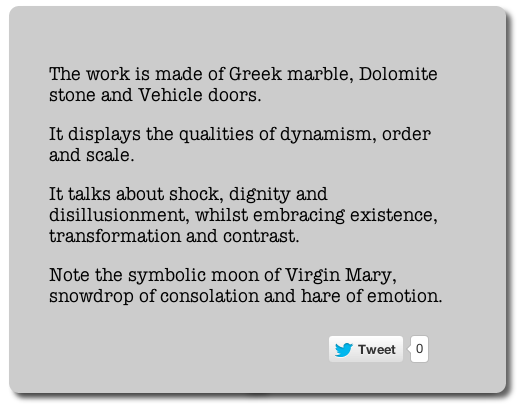

Shardcore’s “Machine Imagined Artworks” (2013) uses the Tate collection metadata to make descriptions of possible artworks. Compared to the data-driven approach of The Fear Of Missing Out, Machine Imagined Artworks is a more traditional generative program using unconstrained randomness to choose its materials from within the constrained conceptual space of the Tate data’s material and subjects ontologies.

Randomness is ubiquitous but often frowned upon in generative art circles. It gives good results but lacks intention or direction. Finding more complex choice methods is often a matter of rapidly diminishing returns, though. And Machine Imagined Artworks makes the status of each generated piece as a set of co-ordinates in conceptual space explicit by numbering it as one of the 88,577,208,667,721,179,117,706,090,119,168 possible artworks that can be generated from the Tate data.
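The idea of numbering each piece as a set of co-ordinates in conceptual space can be illustrated with a mixed-radix encoding, in which every artwork number decodes to exactly one term per vocabulary. This is a sketch under assumptions: the three toy vocabularies and the function names are invented here, and the actual program may number its outputs differently.

```python
from math import prod

# Toy ontologies, invented for illustration; the Tate vocabularies
# are far larger.
AXES = [
    ["oil paint", "bronze", "video"],         # material
    ["landscape", "portrait", "still life"],  # subject
    ["melancholy", "joy"],                    # emotion
]


def total_artworks(axes):
    """Number of distinct combinations: the product of the axis sizes."""
    return prod(len(axis) for axis in axes)


def index_to_choice(index, axes):
    """Decode an artwork number into one term per axis (mixed radix)."""
    if not 0 <= index < total_artworks(axes):
        raise ValueError("index out of range")
    choice = []
    for axis in reversed(axes):
        index, digit = divmod(index, len(axis))
        choice.append(axis[digit])
    return choice[::-1]


def choice_to_index(choice, axes):
    """Encode one term per axis back into its artwork number."""
    index = 0
    for term, axis in zip(choice, axes):
        index = index * len(axis) + axis.index(term)
    return index
```

With these toy axes there are 3 × 3 × 2 = 18 possible artworks, and picking a uniformly random integer below that total is equivalent to picking each term at random. Adding vocabularies multiplies the total, which is how very large counts of possible artworks arise.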

Machine Imagined Artworks describes the formal, intentional and critical schema of an artwork. This reflects the demands placed on contemporary art and artists to fit the ideology both of the artworld and of academia as captured in the structure of the Tate’s metadata. It makes a complete description of an artwork under such a view. The extent to which such a description seems incomplete is the extent to which it is critical of that view.

We could use the output of Machine Imagined Artworks to choose 3D models from Thingiverse to mash-up. Automating this would remove human artists from the creative process, allowing the machines to take their jobs as well. The creepy fetishization of art objects as quasi-subjects rather than human communication falls apart here. There is no there there in such a project, no agency for the producer or the artwork to have. It’s the uncanny of the new aesthetic.

Software that directs or displaces an artist operationalises (if we must) their skills or (more realistically) replaces their labour, making them partially or wholly redundant. Dealing in this software while maintaining a position as an artist represents this crisis but does not embody it as the artist is still employed as an artist. Even when the robots take artists’ jobs, art critics will still have work to do, unless software can replace them as well.

There is a web site of Markov chain-generated texts in the style of the Art & Language collective’s critical writing at http://artandlanguage.co.uk/, presumably as a parody of their distinctive verbal style. Art & Language’s painting “Gustave Courbet’s ‘Burial at Ornans’ Expressing…” (1981) illustrates some of the problems that arbitrary assemblage of material and conceptual materials causes and the limitations both of artistic intent and critical knowledge. The Markov chain-written texts in their style suffer from the weakness of such approaches. Meaning and syntax evaporate as you read past the first few words. The critic still has a job.

Or do they? Algorithmic criticism also has a history that goes back several decades, to Gips and Stiny’s book “Algorithmic Aesthetics” (1978). It is currently a hot topic in the Digital Humanities, for example with Stephen Ramsay’s book “Reading Machines: Toward an Algorithmic Criticism” (2011). The achievements covered by each book are modest, but demonstrate the possibility of algorithmic critique. The problem with algorithmic critique is that it may not share our aesthetics, as the ST5 antenna shows.

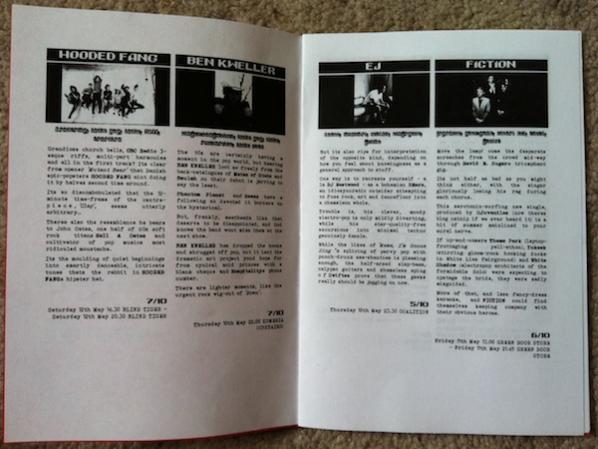

Shardcore’s “Cut Up Magazine” (2012) is a generative critic that uses a similar strategy to “The Fear Of Missing Out”. It assembles reviews from snippets of a database of existing reviews using scraped human generated data about the band such as their name, genre, and most popular songs. Generating the language of critique in this way is subtly critical of its status and effect for both its producers and consumers. The language of critique is predictable, and the authority granted to critics by their audience accords a certain status to that language. Taken from and returned to fanzines, Cut Up Magazine makes the relationship between the truth of critique, its form, and its status visible to critique.

We can use The Fear Of Missing Out-style big data approaches to create critique that has a stronger semantic relationship to its subject matter. First we scrape an art review blog to populate a database of text and images. Next we train an image classifier (a piece of software that tells you whether, for example, an image contains a Soviet tank or a cancer cell or not) and a text search engine on this database. Then we use sentiment analysis software (the kind of system that tells airlines whether tweets about them are broadly positive or negative) to generate a score of one to five stars for each review and store this in the database.

We can now use this database to find the artworks that are most similar in appearance and description to those that have already been reviewed. This allows us to generate a critical comment about them and assign them a score. Given publishing fashion we can then make a list of the results. The machines can take the critic’s job as well, as I have previously argued.
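The final lookup step of this pipeline can be sketched very roughly as a nearest-neighbour search. To keep the example self-contained, a bag-of-words cosine similarity over text descriptions stands in for the image classifier and search engine, and the star scores are assumed to have been produced and stored already. The database rows, comments and function names are all invented for illustration.

```python
import math
from collections import Counter

# Hypothetical database rows: (description, critical comment, stars).
REVIEWED = [
    ("large abstract oil painting in red and black",
     "Bold but overfamiliar.", 3),
    ("video loop of waves projected on a gallery wall",
     "Hypnotic and quietly political.", 4),
    ("bronze sculpture of a seated figure",
     "Competent, conservative, inert.", 2),
]


def vectorize(text):
    """Turn a description into a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def review(description, reviewed=REVIEWED):
    """Borrow the comment and score of the most similar reviewed artwork."""
    target = vectorize(description)
    best = max(reviewed, key=lambda row: cosine(target, vectorize(row[0])))
    return best[1], best[2]
```

For example, `review("small oil painting in blue and black")` borrows the comment and three-star score of the first row, because that description shares the most words with it. A fuller version would compare image features as well as text.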

What pieces like The Fear Of Missing Out and Machine Imagined Artworks make visible is an aspect of How Things Are Done Now and how this affects everyone, regardless of the nature of their work. This is “big data being used to guide the organization”. To regard such projects simply as parody or as play acting is to take a literary approach to art. But art doesn’t need to resolve such ontological questions in order to function, and may provide stronger affordances to thought if it doesn’t. What’s interesting is both how much such an approach misses and how much it does capture. As ever, art both reveals and symbolically resolves the aporia of (in this case the Californian) ideology.

The text of this review is licenced under the Creative Commons BY-SA 3.0 Licence.

Featured image: Still from performance of Structure M-11

You would measure time the measureless and the immeasurable.

You would adjust your conduct and even direct the course of your spirit according to hours and seasons. Of time you would make a stream upon whose bank you would sit and watch its flowing. – Kahlil Gibran

Algorithms only really come alive in the time-frames that they move through. Their existence depends on being able to move freely along time’s arrow, unfolding and expanding out into the universe, or reversing themselves backwards into a finite point. Every form and structure that the universe creates is the result of a single step along that pathway and we’re only ever observing it at a single moment. Those geological steps can take millions of years to unfold and we can only ever really look back and see the steps that happened before we chose to observe them. Computational algorithms break down that slow dripping of nature’s possibilities and allow us to become time-travellers, stepping into any point that we choose to.

Paul Prudence is a performer and installation artist who works with computational, algorithmic and generative environments, developing ways to reflect his interest in patterns, nature and the mid-way point between scientific research and artistic pursuit. The outputs from this research are near cinematic, audio-visual events. Prudence’s creative work, and his blog, Dataisnature (kept since October 2004), explore a number of creative potentials as well as documenting the creative and scientific research work of others that he finds of interest. As the blog’s bio states:

“Dataisnature’s interest in process is far and wide reaching – it may also include posts on visual music, parametric architecture, computational archaeology, psychogeography and cartography, experimental musical notational, utopian constructs, visionary divination systems and counter cultural systems.”

Paul himself feels Dataisnature, and his other blogs, are by their very nature ordering systems, trying to create some kind of structure on information. “Yes, it’s true [that they are ordering systems], but the ordering is sometimes a little bit oblique. I am not interested in ordering systems such as categories or tags, for example, as each blog post has the potential to generate many of its own categories.”

The blog and perhaps all blogs, shouldn’t be an end in themselves then? Should they be a starting point for a deeper investigation? “Well, I’m more interested in substrate and sedimentary structuring – specific fields existing in layers and sometimes overlapping and interacting rhizomatically.”

Blogging for Paul and many bloggers who don’t operate within the ‘monetization of blogging’ sphere that has grown up in the past few years could almost be considered a documentation and ordering process for the creative process. The process and interaction between the theory and the blog as textual artefact becomes quite complex. As does the theory and creative output of the blogger. Paul would argue that this isn’t always something that can be even as straightforward as theory to practice though.

“The posts at Dataisnature are not confined to theoretical relationships between art and science projects, but also take into account metaphorical ones. I never wanted the posts to be so pinned down that they disable the opportunity to make entirely new connections at any level.”

So the chance to see what happens inbetween strict disciplines and an openness to the potentials that may arise out of relaxing the barriers? Shouldn’t that be the way that everything else that is ‘not of academia’ operates anyway? And for that matter, outside of the possibilities of arts/science/research funding.

“I applied the term ‘recreational research’ to Dataisnature in its early days,” Paul explains. “This is still to some degree important – the notion that research doesn’t have to be tied down by the prospect of peer review or academic formatting. This kind of interdisciplinary research can be highly addictive – it’s the new sport of the internet age. It can generate blogs that become chaotic repositories of interconnectedness – linearity becomes infected with cut-up and collage. In my own mind I have an idea of what Dataisnature is trying to say but I get people approaching me with completely different, and amusing theories of what they believe the blog is about.”

In digital arts (or let us call it digital creativity, to avoid the complexity of art versus design versus technology) the equipment used and the research of the creator have at times become almost indistinguishable. A painter is often only one step away from being a chemist, a sculptor closer to an engineer than a painter. The tools used define and form some of the output. Digital creativity only makes this more implicit. So when using technologies and researching, the scientist and the creative often walk hand-in-hand towards the finished artefact. As Prudence says: “Collaboration among artists and scientists exists through time as well as space.”

“A great part of an artist’s task is to be a researcher. It’s important to remember that any idea you have has already been tackled in the past with a different (want to avoid the term lesser) technology.”

The blogging process offers a chance to gather information and allow some of the artist’s own influences and present interests to manifest themselves into a rough-hewn structure. “For me, blogging facilitates a medium for an archaeology of aesthetics, technology and conceptuality. All this fragmented information is gathered then reconstituted, and fed back into the artistic practice. Of course my personal work blog is more about supplying supplementary material to anyone interested in my work.”

Taking an arguably typical example of Paul Prudence’s work, for example Structure-M11, the sense of a becoming and developing is in the way it attempts to reconnect with what (for want of a better phrase) could possibly be called our lost industrial heritage.

Looking through Prudence’s flickr stream documenting the research trip, there are numerous industrial landscapes empty of human life, where only the machines have been allowed to remain, static and poised, ready to begin work again. If only someone would employ them. These machines perform simple tasks, but they do it elegantly, time after time after time, never complaining and never asking for any recognition. Perhaps that’s why it is so easy to abandon them? And these machines are not only a monument to the way we discard unwanted technologies, they also reflect the changing fortunes of the town as it has moved from production-based economy to one centred mainly on tourism and smaller businesses. It is fitting in a way that the soundscapes and visuals that Prudence has brought to life from these landscapes have such a contemporary, sci-fi industrial feel to them. As though the clean, slick lines and geometric perfection had emerged, phoenix-like, from the unbearably hot, oil soaked environments of the factories and the monotonous repetition of working within them.

The soundtrack that accompanies the performance was made from field recordings at the site. From these, Prudence generated real-time visuals that reflected some of the sonic activations and echoes throughout these landscapes. The final pieces look like ‘robotic origami contraptions.’ The steady throb and crash of the audio reflects the repetition of the machine and its operator’s lives while also suggesting some of the dehumanising effects working in a factory can have on a person. There’s also the beauty, of course, if you shift your own perception a few degrees away from the machines, there is always a window looking out at a natural landscape. And those same slick, geometric shapes of the machines begin to reflect some of the elegance of the world of nature. Nature, like humanity, loves to repeat itself infinitely until something breaks that pattern. Isn’t that a fundamental part of mutation and evolution? Structure-M11 seems to be constantly mutating and growing new rhizomes, but nothing complete ever emerges. Paul Prudence’s work isn’t here to save us from the monotony of the machines though, its task is to remind us of how important nature is to our lives, no matter how entangled in the machine those lives may begin to feel.

Prudence’s interest in the natural spaces emerges from his own theory-based interests. As he says, “My interest in generative systems and procedural-based methodologies in art led to a way of seeing landscape formations and geological artefacts as a result of ‘earth-based’ computations.”

“The pattern recognition part of the brain draws analogies between spatio-temporal systems found in nature and ones found in computational domains – they share similar patterns. I began to think of the forms found in natural spaces more and more in terms of the aeolian protocols, metamorphic algorithms and hydrodynamic computations that created them.”

“Some of these pan-computational routines run their course over millions of years, some are over in a microsecond, yet the patterns generated can be amazingly similar. I like the fact that when I go walking in mountains my mind switches to [the] subject of process, computation and doWhile() loops inspired by the geological formations I come across.”

This connection and flowing from one space to the other, gives the viewer the feeling that they recognise the shapes and patterns from something they’ve seen before. Attending a performance of Prudence’s work might make you feel as though you’ve been to one already. But it’s just the reconnection of interconnection that you’d be experiencing. And that’s always a good place to start, when experiencing any artwork, isn’t it?

21 Sept 2012

Scopitone Festival, Nantes, France.

24 Sept 2012

Immerge @ SHO-ZYG, London

17-25 October 2012

VVVV Visual Music Workshops at Playgrounds 2012, National Taipei University of Art, Taipei & National Museum of Art, Taichung, Taiwan


Decode: Digital Design Sensations

The Victoria and Albert museum, London

8 December 2009 – 11 April 2010

Decode: Digital Design Sensations at the Victoria and Albert Museum (V&A) brings the state of the art in art computing to a venerable cultural institution. Everything from the posters and banners around town to the hoardings on the entrance to the gallery containing the show makes it clear that Decode is a serious cultural event. It’s a spectacle, a dark space alongside the well-lit galleries of the V&A, drawing you in with points of light and distant sounds. The crowds are reassuring for the popularity of art computing yet disconcerting for the experience of the art at times.

Don’t forget to ask for a catalogue as you hand over your ticket on the way in. The sponsor’s foreword should raise a smile to anyone familiar with the software industry, but the introductory essay (which only occasionally becomes the latest casualty of the confusion that the word “open” shows), the details of works in the show and the interviews with Golan Levin and Daniel Rozin are all very informative. The catalogue also draws attention to Karsten Schmidt’s specially commissioned graphic identity for the show, which can be downloaded and modified as Free Software.

The show is divided into three sections. Generative art, data visualisation, and interactive multimedia (or, as the catalogue puts it – Code, Network and Interactivity).

The generative artworks suffer in comparison to the other pieces by being mostly small-scale screen based pieces. However appealing the images are on the screen (and they are) they cannot compete with the projections and three dimensional installations of the other sections. With the exception of an interactive version of the video to Radiohead’s House of Cards by James Frost and Aaron Koblin, the work does not refer to the human figure or to the viewer, another feature of many of the most popular pieces in the other sections. And apart from Matt Pyke’s typographic totem pole, my other favourite piece of the section, the work is calm. Beautiful, but calm. It would reward prolonged contemplation in a quieter environment and might benefit from presentation on a larger scale to better bring out its aesthetic qualities. But this is not that environment, and that presentation is not given to the work here.

The data visualisation section has more projections and custom hardware, and also has more human interest. The emotion of We Feel Fine by Jonathan Harris and Sep Kamvar, the surveillance state exposé of Stanza’s Sensity, CCTV assemblages, Make-Out, the porn-inspired kissing figures of Rafael Lozano-Hemmer and the social data visualizations of Social Collider by Sascha Pohflepp & Karsten Schmidt are sometimes less visually sophisticated than some of the generative pieces, but address current social and technological developments more directly. The world wide web is twenty years old, it has drawn in the mass media and media feeds from the real world, and many of its users produce and encounter gigabytes of data over time. Representing and exploring that technological and cultural environment is something that art can do and that art is particularly well placed to do given the importance of the aesthetics of interfacing and visualisation to the contemporary web.

The interactive multimedia section contained the real crowd pleasers of the show, although some of the pieces had “out of order” notices on them when I visited. Yoke’s virtual reality Dandelion Clock controlled by a hairdryer, Ross Phillips’s Videogrid, a physically interactive group portrait and Daniel Rozin’s Weave Mirror, a cybernetic sculpture-cum-display-screen. They all give their audience an aesthetic experience that briefly changes their relationship to the world, and in some cases shows them themselves in that new relationship. Interactive multimedia installation is clearly due a resurgence.

The V&A have presented Decode as a design show. I was struck by this framing of the work when I visited the show, and most of the people I have spoken to about the show since have commented on it as well. Many of the participants are graphic designers or work in design as well as art and education, but much of the work would be poorly served by being regarded as design rather than as art. It is not advertising, or presentation of anything other than itself for the most part. Where the work is information design, the information has been chosen by the designer. That said the art computing MA I attended as a student had to be called a “design” course to get funding, so possibly this is a constant. And the V&A have done a great job of presenting the work and letting it speak for itself to the visiting crowds.

This isn’t quite Cybernetic Serendipity 2.0. It excludes the conceptually and performatively rougher edges of contemporary art computing. But these exclusions are largely practical; there is no livecoding and there are no email or self-contained web browser-based works. Some of the work is strikingly but subtly political in its representation of current social and political trends such as surveillance, online pornography and the death of privacy.

The V&A have done the conventional artworld and the general public a great service by presenting Decode. The show contains enough big and up-and-coming names in art computing and digital design to provide a convincing if necessarily incomplete survey of the contemporary scene. Decode also serves an important role for artists and students with an interest in or a stake in art computing by focussing attention on what others have achieved that can be built on.

Decode shows the achievements of the personal computing and web eras of art computing becoming established with and recognized by the broader arts establishment. The danger is that the story will finish triumphally here. Processing has become the new Shockwave, and particle systems and shape grammars are not enough in themselves for long without an accompanying progressive and deeper engagement with the aesthetics and history of art, technology, wider society, or all three. Art computing is not immune to technical and aesthetic conservatism. To avoid this I think that it needs to intensify, to become more like itself; to become more beautiful, to tackle larger datasets, to become more interactive. In other words, it needs to build on the achievements gathered together and presented here.

http://www.vam.ac.uk/exhibitions/future_exhibs/Decode/

The text of this review is licenced under the Creative Commons BY-SA 3.0 Licence.

Mark Napier’s Venus 2.0

Angela Ferraiolo

February 5, 2010

“Now that I’m done, I find the artwork disturbing. It freaks me out. Maybe I’ll do landscapes for a while to detox.” – Mark Napier

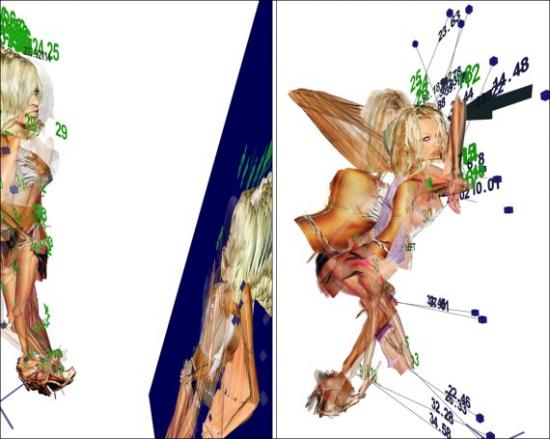

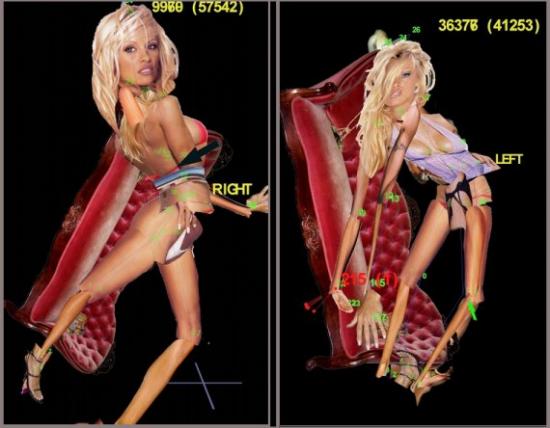

Artist Mark Napier, well-known for the net classics Shredder, Riot, and Digital Landfill, recently exhibited his latest work Venus 2.0 at the DAM Gallery in Berlin. A sort of portrait via data collection, Venus 2.0 uses a software program to cross the web and collect various images of the American television star Pamela Anderson. These images are then broken up, sorted by body parts and put back together. As this inventory of fragments cycles onscreen (a leg, a leg, another leg), the progressive offset of each rendering of Anderson’s head, arms, and torso makes her reconstructed figure twist, turn and jerk like a puppet. It’s odd looking. Even her creator is a little afraid of her:

“I have to say, if I ever meet Pamela Anderson in real life I think I’ll completely freak out and run away. I don’t think I could have a conversation with the woman after this project … I started with a sympathetic view of the actual Pamela Anderson. She is part of the spectacle of sexuality in contemporary media. She’s caught in that ‘desiring machine’ as much as any other human, probably even more so. She has surgically modified her body in order to have greater leverage in the media. Does this put her in control? I think not.”

Napier’s project doesn’t answer or intend to answer the question of who is in control either, but it plays with the possibilities. Although I wasn’t able to see the portrait at its exhibition, I did track down part of the series, Pam Standing, at a private location here in New York. Napier has also posted video excerpts of the work online. Unfortunately, these still images and clips are a bit too static to really do the piece justice. If you get to see the real thing and if you look for a while, you’ll see what I mean. Venus 2.0 is an amazing jigsaw puzzle, a deceptive surface of shifting layers – part painting and part search result.

The effect is translucent, lyrical, mathematic and creepy. When I implied that it must have taken an awful lot of precision and calculation to get something like this to come together, Napier insisted that focusing on the technology behind Venus 2.0, its algorithm, or even on the unique materiality of networked art in general, is a way of missing the point. The way he relates to Venus 2.0 is through the painter’s hand. What counts is not the data feed, but who we are, what we want and how we behave:

“The artwork is a riff on sexuality as an elaborate hoax played by a precocious molecule that builds insanely complex sculptures out of protein, aka, meat puppets or more simply, ‘us’. These animate shells follow a script that’s written and refined over the past half billion years. And that script is in a nutshell: 1) survive 2) reproduce 3) goto #1. So sexual attraction is the carrot to DNA’s stick. Attraction is part of the machine. In art history this appears as the tradition of Venus. And more recently: Duchamp’s ‘Bride’. Magritte’s ‘Assasin’. Warhol’s ‘Marilyn’. The focus of digital art is still the human being. Don’t be distracted by the gadgets.”

Hi-tech or not, new media artists are by now well-practiced in the technique of unraveling a familiar image to expose an occluded, yet equally valid identity. In Distorted Barbie, Napier relied on a repeated process of digital degradation to blur both the literal and conceptual fiction of the Mattel franchise. Another early project, Stolen, took the female body apart, presenting attraction as database. Years later these kinds of approaches, pixel manipulations and indexing without comment, as well as parsing and remixing, have become a sort of standard operating procedure in new media. So much so that as technique becomes more well-known and more accessible, it can be hard to tell one work from another or one artist from the next. With this in mind, it seems important to note that in the case of Venus 2.0 it is not so much a choice of subject or an organizational scheme that guarantees the final effect, but the manner in which Napier investigates the image and the ways in which Napier illustrates and manipulates both the visual elements of portraiture and his relationship to the figure involved.

Napier builds and destructs, assembles and tears apart, but in doing so tries his best to keep us focused on these activities as processes. At times Pam’s contortions are allowed to expose the wireframe which allegedly guides her construction and at certain key points on the interface, there is also a steady stream of vector coordinates to embody the idea of data. But Napier makes less of technology than another artist might by effacing what some would tend to elaborate on, in order to draw our attention elsewhere – usually towards evolution, gradation and the ways in which images evolve, and by extension the ideas that inspire those images which reflect how unstable elements are, even treacherous:

“I work at probabilities: what is the likelihood that the figure will be totally opaque? How often does it get murky and undefined? How often should I let that happen and for how long? How often does the figure jump? How long till the figure lands and stands up again? How much time is spent in chaotic motion and how much in stillness? How often does something completely messy happen? Like the figure gets tangled up in a ball that has no structure. I don’t know how the piece will play out each minute, but I know what kind of situations will arise in the piece. I can plan those likelihoods, and then let the piece play and see what it does.”

So, like the woman who inspired her, Pam Standing is beautiful, but more unsettling. What makes Pamela Anderson the most frequently mentioned woman on the Internet? Why do we mention her? What is it we want when we give our gaze to celebrity? What has celebrity done to attract us? Whatever your answers, what Napier privileges in his response is not a fixed portrait of a star, but a cloud of iteration that produces its own image and reproduces that image, jangled, confused and full of surprises. A portrait whose finest moments are accidental and whose design is an accumulation of fragments that tense and dissolve, floating like clouds one instant and then sinking like lead the next. This is where the imagination takes a step forward and Napier the artist or Napier the geek becomes Napier the puppetmaster, a networked Dr. Coppelius:

“My favorite moment with the PAM series was when I finally got all the math to work so that the body part images displayed correctly and the correct side of the body showed at the right time and the stick figure suddenly started to look like a ‘real’ puppet that was starting to come alive in the golem-esque way that I thought it could when I was sketching out the idea. I had that Dr. Frankenstein moment: ‘She’s aliiiiiiiiiive!!!!!!!!!!!!!’ which was really the point all along, to create this virtual golem that is clearly not alive at all and yet teases us all with this false appearance of life. Venus 2.0.”

Until the layers of arms, legs, breasts and hips collapse in on themselves and Pam is back where she started, a manipulated beauty unable to escape presentation as a collaged monstrosity.

Note: Mark Napier has been creating network art since 1995. One of the earliest artists to deal thematically and formally with the Internet, Napier has been commissioned to create net art by the Solomon R. Guggenheim Museum and the San Francisco Museum of Modern Art. His work has appeared at the Centre Pompidou in Paris, P.S.1 New York, the Walker Art Center in Minneapolis, Ars Electronica in Linz, The Kitchen, Kunstlerhaus Vienna, ZKM Karlsruhe, Transmediale, iMAL Brussels, Eyebeam, the Princeton Art Museum, la Villette, Paris, and at the DAM Gallery in Berlin.