Mainframe Experimentalism

Early Computing And The Foundations Of The Digital Arts

Edited by Hannah B Higgins and Douglas Kahn

University of California Press, 2012

ISBN 978-0-520-26838-8

The history of arts computing’s heroic age is a family affair in Hannah B Higgins and Douglas Kahn’s Mainframe Experimentalism. Starting with the founding legend of the FORTRAN programming workshops that one of the editors’ parents led in their New York apartment in the 60s, the book quickly broadens across continents and decades to cover the mainframe and minicomputer period of digital art. Several of the chapters are also written by children of the artists. Can they make the case that the work they grew up with is of wider interest and value?

The 1960s and 1970s were the era before the rise of home and micro computing, when small computers weighed as much as a fridge (before you added any peripherals to them) and large computers took up entire air-conditioned offices. Mainframes cost millions of dollars and minicomputers tens of thousands, at a time when the average weekly wage was closer to a hundred dollars. To access computers you had to engage with the institutions that could afford to maintain them – large businesses and universities – and with their guardians, the programmers and system administrators who knew how to encode ideas as marks on punched cards for the computer to run.

Computers looked like unlikely tools or materials for art. The governmental, corporate and scientific associations of computers made them appear actively opposed to the individualistic and humanistic nature of mid-20th Century art. It took an imaginative leap to want to use, or to encourage others to use, computers in art making.

Flowchart for asking your boss for a raise, Georges Perec

Many people were unwilling or unable to make that leap. As Grant Taylor describes in The Soulless Usurper, “Almost any artistic endeavor (sic) associated with early computing elicited a negative, fearful, or indifferent response” (p.19). The idea of computing, or its cognates, was as powerful a force in culture as any access to actual computer hardware, a point that David Bellos makes with reference to the pataphysical bureaucratic dramas of Georges Perec’s Thinking Machines. Those wider cultural ideas, such as structuralism, could provide arts computing with the context that it sorely lacked in most people’s eyes, as Edward A. Shanken argues in his discussion of the ideas behind Jack Burnham’s “Software” exhibition, an intellectual moment in urgent need of rediscovery and re-evaluation.

The extensive resources needed to access computing machinery led to clusters of activity around those institutions that could provide access to them. In Information Aesthetics and the Stuttgart School, Christoph Klütsch describes the emergence of a style and theory of art in that town (including work by Frieder Nake and Manfred Mohr) in the mid-1960s. In communist Yugoslavia the New Tendencies school at Zagreb achieved international reach with its publications and conferences as described by Margit Rosen.

Charlie Gere’s Minicomputer Experimentalism in the United Kingdom describes the institutional aftermath of the era that is the book’s focus. Like Gere, I arrived at Middlesex University’s Centre For Electronic Arts in the 1990s with the knowledge that there was a long history of computer art making there. Also like Gere, I encountered John Lansdown in the hallways and regret not taking the opportunity to ask him more about his groundbreaking work.

Perhaps surprisingly, music was an early aesthetic and cultural success for arts computing. The mathematics of sound waveforms, or musical scores, were tractable to early computers that had been built to service military and engineering mathematical calculations. In James Tenney at Bell Labs, Douglas Kahn makes the case that “Text generation and digitally synthesized sound were the earliest computer processes adequate to the arts” (p.132) and argues convincingly for the genuine musical achievements of the composer’s work there. Branden W. Joseph places John Cage and Lejaren Hiller’s multimedia performance “HPSCHD”, made using the ILLIAC II mainframe, in the context of the aesthetics and the critical reactions of both, and considers how the experience may have influenced Cage’s later, more authoritarian politics. Christoph Cox, Robert A. Moog and Alvin Lucier all write about the latter’s “North American Time Capsule 1967”, a proto-glitch vocoder piece that, speaking as someone who is not any kind of expert in that area, I didn’t feel warranted such extensive treatment.

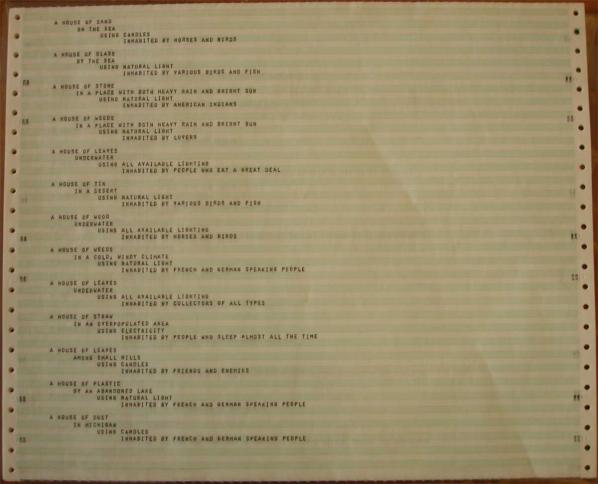

Hannah B Higgins provides An Introduction To Alison Knowles’s House of Dust, describing it as “an early computerized poem”. It’s a good poem, later realized in physical architecture and given extensive responses by students and other artists, and it helps underwrite the claim for early arts computing’s cultural and aesthetic significance. Benjamin H.D. Buchloh describes the cultural and programmatic construction of the poem in The Book of the Future. And to jump ahead for a moment, a later extract from Dick Higgins’ 1968 pamphlet “Computers for the Arts” explains the programming techniques that programs like “House of Dust” used.

I mention this now because of the way that the extract of Higgins’ pamphlet contrasts with the version published in 1970 (available online as a PDF scan that I would urge anyone interested in the history of arts computing to find and study under academic fair use/dealing). Mainframe Experimentalism includes many wonderful examples of the output of programs, and many detailed descriptions of the construction of artworks. But the original of “Computers for the Arts” goes beyond this. It includes not just a description of the code techniques but a walk-through of the code and the actual FORTRAN IV program listings. Type these into a modern Fortran compiler and they will run (with a couple of extra compiler flags…). For all the strengths of Mainframe Experimentalism, it is this kind of incredibly rare primary source material that we also need access to, and it is a shame that where more was available it couldn’t be included.

Three Early Texts by Gustav Metzger on Computer Art, collated by Simon Ford, gives the reader a feel for the intellectual zeitgeist of arts computing at the turn of the 1970s, one that might surprise critics then and now with its political literacy and commitment. William Kaizen brings Nam June Paik’s lesser-known computer-based (rather than television-based) work to the foreground while tying it to the artist’s McLuhanish hopes for empowering global media.

Knowles’ poem isn’t in the section on poetry (it’s classified as Intermedia), which begins with Christopher Funkhouser’s First-Generation Poetry Generators: Establishing Foundations in Form. Funkhouser gives an excellent overview of the technologies and approaches used to create generative text in the mid-1960s, providing a wonderful selection of examples while tracking precedents back through Mallarmé to Roman times.

In Letter to Ann Noël, Emmet Williams explains the process for creating a letter-expanding poem that had been recreated on an IBM mainframe. Like “House of Dust” it’s an example of computer automation increasing the power of an existing technique for generating texts. Hannah B Higgins’ The Computational Word Works of Eric Andersen and Dick Higgins draws a line out of Fluxus for the artists’ Intermedia and computing work. Eric Andersen’s artist’s statement describing the lists of words and numbers used to create “Opus 1966” shows both the ingenuity and the intellectual rigour that artists brought to bear on early code poetry. The inclusion of Staisey Divorski’s translation of Nanni Balestrini’s specification for “Tape Mark I” provides an example of the depth of appreciation that preparatory sources can provide for an artwork. In The Role of the Machine in the Experiment of Egoless Poetry: Jackson Mac Low and the Programmable Film Reader, Mordecai-Mark Mac Low describes how his father took ideas from Zen Buddhism and negotiated the technical limitations of late 1960s computing machinery to realise them in poetic form.

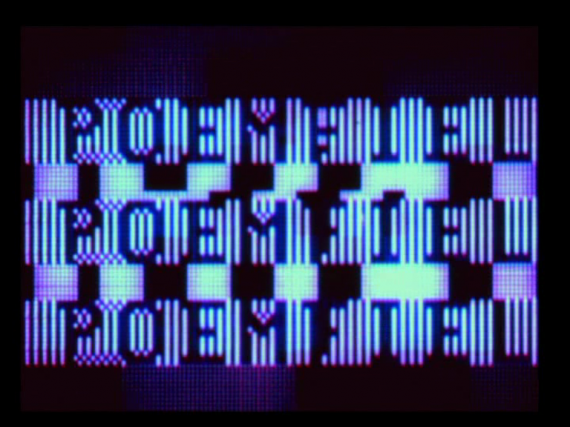

Finally, Mainframe Experimentalism turns to cinema. Gloria Sutton casts Stan VanDerBeek’s “Poemfields” in a more computational light than their usual place in media history as experimental films to be projected in the artist’s MovieDrome dome. Ending where a history of the ideas and technology of arts computing might otherwise begin, Zabet Patterson describes the triumphs and frustrations of using World War II surplus analogue computers to make films in From The Gun Controller To The Mandala: The Cybernetic Cinema of John and James Whitney. It’s a fitting finish that feels like it brings the book full circle.

I mentioned that several of the essays in Mainframe Experimentalism were written by family members of the artists. A number of the essays also overlap in their coverage of different artists, or describe encounters or influences between them. Arts computing was a small world, a genuine avant-garde. We are lucky not to have lost all memory of it, and we should be grateful to those students and family members who have kept those memories alive.

In “Computers For The Arts”, Dick Higgins describes two ways of generating output from a computer program: aleatory (randomized) and non-aleatory (iterative). Christopher Funkhouser’s and Hannah B Higgins’s essays also touch on this difference, but forty years later. This is key to understanding computer art making not just in the mainframe era but today. Computers are good for describing mathematical spaces and then exploring them step by step or (pseudo-)randomly, and whether it’s an animated GIF or a social media bot you can often see which of those processes is at work. It’s inspiring to see such fundamental and lasting principles identified and made explicit so early on.
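To make that distinction concrete, here is a minimal sketch in Python rather than the FORTRAN IV of the period. It is my own illustration, not code from Higgins’ pamphlet or from the book: the word lists, the sentence template and the function names are all invented for the example. Both functions work over the same small space of combinations; one walks through it in a fixed order, the other samples it (pseudo-)randomly.

```python
import itertools
import random

# Hypothetical word lists and template, invented for illustration only;
# they are not taken from any of the works discussed in the book.
MATERIALS = ["paper", "glass", "stone"]
PLACES = ["by the sea", "in a city", "on a hill"]


def non_aleatory():
    """Iterative: visit every combination of the lists in a fixed order."""
    return [
        f"a room of {material} {place}"
        for material, place in itertools.product(MATERIALS, PLACES)
    ]


def aleatory(count, seed=None):
    """Randomized: draw `count` lines (pseudo-)randomly from the same space."""
    rng = random.Random(seed)  # a fixed seed makes a run repeatable
    return [
        f"a room of {rng.choice(MATERIALS)} {rng.choice(PLACES)}"
        for _ in range(count)
    ]


if __name__ == "__main__":
    print("\n".join(non_aleatory()))
    print("\n".join(aleatory(4)))
```

The iterative version always yields the same nine lines in the same order, while the randomized version may repeat or omit combinations, which is often how you can tell the two processes apart in a finished piece.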

Away from the era of the “Two Cultures” of science and the humanities, and of computing’s guilt by association with the database-driven Vietnam War, the art of Mainframe Experimentalism rewards consideration as a legitimate and valuable part of art history. Not all of it equally, and not all of it to the same degree – but that is true of all art, and cannot be used to disregard early arts computing as a whole. This aesthetically and intellectually under-appreciated moment in Twentieth Century art is crying out for a critical re-evaluation and an art historical recuperation. Mainframe Experimentalism provides ample examples of where we can start looking, and exactly why we should.

The text of this review is licensed under the Creative Commons BY-SA 4.0 Licence.