Jonas Lund’s “The Fear Of Missing Out” (2013) is a series of gallery art objects made by the artist following the instructions of a piece of software they have written. It has gained attention following a Huffington Post article titled “Controversial New Project Uses Algorithm To Predict Art “.

Art fabricated by an artist following a computer-generated specification is nothing new. Prior to modern 2D and 3D printing techniques, transcribing a computer generated design into paint or metal by hand was the only way to present artworks that pen plotters or CNC mills couldn’t capture. But a Tamagotchi-gamer or Amazon Mechanical Turk-style human servicing of machine agency where a program dictates the conception of an artwork for a human artist to realize also has a history. The principles involved go back even further to the use of games of chance and other automatic techniques in Dada and Surrealism.

What is novel about The Fear Of Missing Out is that the program dictating the artworks is doing so based on a database derived from data about artworks, art galleries, and art sales. This is the aesthetic of “Big Data“, although is not a big dataset by the definition of the term. Its source, and the database, are not publicly available but assuming it functions as specified the description of the program in the Huffington Post article about it is complete enough that we could re-implement it. To do so we would scrape Art Sales Index and/or Artsy and pull out keywords from entries to populate a database keyed on artist, gallery and sales details. Then we would generate text from those details that match a desired set of criteria such as gallery size and desired price of artwork.

What’s interesting about the text described in the Huffington Post article is that it’s imperative and specific: “place the seven minute fifty second video loop in the coconut soap”. How did the instruction get generated? Descriptions of artworks in artworld data sites describe their appearance and occasionally their construction, not how to assemble them. If it’s a grammatical transformation of scraped description text that fits the description of the project, but if it’s hand assembled that’s not just a database that has been “scraped into existence”. How did the length of time get generated? If there’s a module to generate durations that doesn’t fit the description of the project, but if it’s a reference to an existing 7.50 video it does.

The pleasant surprises in the output that the artist says they would not have thought of but find inspiring are explained by Edward de Bono-style creativity theory. And contemporary art oeuvres tend to be materially random enough that the randomness of the works produced looks like moments in such an oeuvre. Where the production differs both from corporate big data approaches and contemporary artist-as-brand approaches is that production is not outsourced. Lund makes the art that they use data to specify.

Later, the Huffington Post article mentions the difficulty of targeting specific artists. A Hirst artwork specification generator would be easy enough to create for artworks that resemble his existing oeuvre. Text generators powered by markov chains were used as a tool for parodying Usenet trolls, and their strength lies in the predictability of the obsessed. Likewise postmodern buzzword generators and paper title generators parody the idees fixes of humanities culture.

The output of such systems resembles the examples that they are derived from. Pivoting to a new stage in an artist’s career is something that would require a different approach. It’s possible to move, logically, to conceptual opposites using Douglas Hofstadter’s approaches. In the case of Hirst, cheap and common everyday materials (office equipment) become expensive and exclusive ones (diamonds) and the animal remains become human ones.

This principle reaches its cliometric zenith in Colin Martindale’s book “The Clockwork Muse: The Predictability of Artistic Change”. It’s tempting to dismiss the idea that artistic change occurs in regular cycles as the aesthetic equivalent of Kondratieff Waves as Krondatieff Waves are dismissed by mainstream economics. But proponents of both theories claim empirical backing for their observations.

In contrast to The Fear Of Missing Out’s private database and the proprietary APIs of art market sites there is a move towards Free (as in freedom) or Open Data for art institutions. The Europeana project to release metadata for European cultural collections as linked open data has successfully released data from over 2000 institutions across the EU. The Getty Foundation has put British institutions that jealously guard their nebulously copyrighted photographs of old art to shame by releasing almost 5000 images freely. And most recently the Tate gallery in the UK has released its collection metadata under the free (as in freedom) CC0 license.

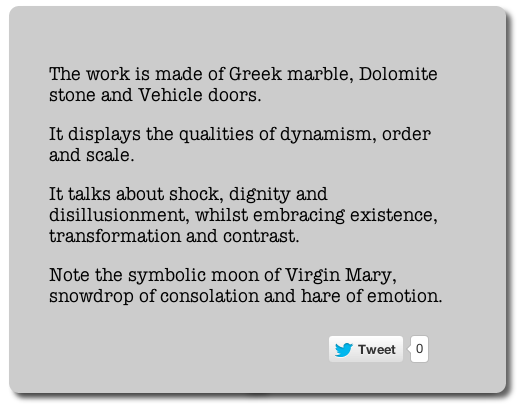

Shardcore’s “Machine Imagined Artworks” (2013) uses the Tate collection metadata to make descriptions of possible artworks. Compared to the data-driven approach of The Fear Of Missing Out, Machine Imagined Artworks is a more traditional generative program using unconstrained randomness to choose its materials from within the constrained conceptual space of the Tate data’s material and subjects ontologies.

Randomness is ubiquitous but often frowned upon in generative art circles. It gives good results but lacks intention or direction. Finding more complex choice methods is often a matter of rapidly diminishing returns, though. And Machine Imagined Artworks makes the status of each generated piece as a set of co-ordinates in conceptual space explicit by numbering it as one of the 88,577,208,667,721,179,117,706,090,119,168 possible artworks that can be generated from the Tate data.

Machine Imagined Artworks describes the formal, intentional and critical schema of an artwork. This reflects the demands placed on contemporary art and artists to fit the ideology both of the artworld and of academia as captured in the structure of the Tate’s metadata. It makes a complete description of an artwork under such a view. The extent to which such a description seems incomplete is the extent to which it is critical of that view.

We could use the output of Machine Imagined Artworks to choose 3D models from Thingiverse to mash-up. Automating this would remove human artists from the creative process, allowing the machines to take their jobs as well. The creepy fetishization of art objects as quasi-subjects rather than human communication falls apart here. There is no there there in such a project, no agency for the producer or the artwork to have. It’s the uncanny of the new aesthetic.

Software that directs or displaces an artist operationalises (if we must) their skills or (more realistically) replaces their labour, making them partially or wholly redundant. Dealing in this software while maintaining a position as an artist represents this crisis but does not embody it as the artist is still employed as an artist. Even when the robots take artists jobs, art critics will still have work to do, unless software can replace them as well.

There is a web site of markov chain-generated texts in the style of the Art & Language collective’s critical writing at http://artandlanguage.co.uk/, presumably as a parody of their distinctive verbal style. Art & Language’s painting “Gustave Courbet’s ‘Burial at Ornans’ Expressing…” (1981) illustrates some of the problems that arbitrary assemblage of material and conceptual materials cause and the limitations both of artistic intent and critical knowledge. The markov chain-written texts in their style suffer from the weakness of such approaches. Meaning and syntax evaporate as you read past the first few words. The critic still has a job.

Or do they? Algorithmic criticism also has a history that goes back several decades, to Gips and Stiny’s book “Algorithmic Aesthetics” (1978). It is currently a hot topic in the Digital Humanities, for example with Stephen Ramsay’s book “Reading Machines: Toward an Algorithmic Criticism” (2011). The achievements covered by each book are modest, but demonstrate the possibility of algorithmic critique. The problem with algorithmic critique is that it may not share our aesthetics, as the ST5 antenna shows.

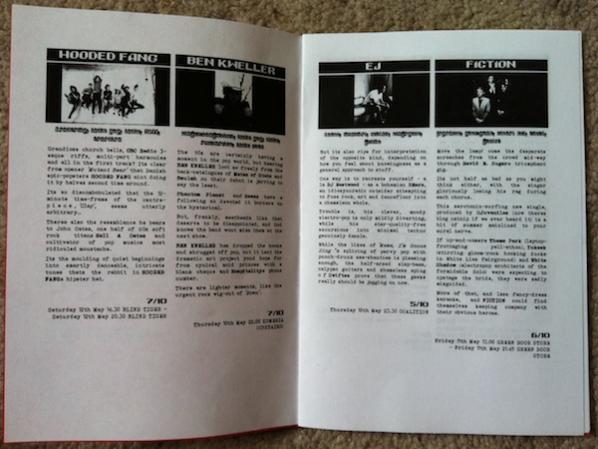

Shardcore’s “Cut Up Magazine” (2012) is a generative critic that uses a similar strategy to “The Fear Of Missing Out”. It assembles reviews from snippets of a database of existing reviews using scraped human generated data about the band such as their name, genre, and most popular songs. Generating the language of critique in this way is subtly critical of its status and effect for both its producers and consumers. The language of critique is predictable, and the authority granted to critics by their audience accords a certain status to that language. Taken from and returned to fanzines, Cut Up Magazine makes the relationship between the truth of critique, its form, and its status visible to critique.

We can use The Fear Of Missing Out-style big data approaches to create critique that has a stronger semantic relationship to its subject matter. First we scrape an art review blog to populate a database of text and images. Next we train an image classifier (a piece of software that tells you whether, for example, an image contains a Soviet tank or a cancer cell or not) and a text search engine on this database. Then we use sentiment analysis software (the kind of system that tells airlines whether tweets about them are broadly positive or negative) to generate a score of one to five stars for each review and store this in the database.

We can now use this database to find the artworks that are most similar in appearance and description to those that have already been reviewed. This allows us to generate a critical comment about them and assign them a score. Given publishing fashion we can then make a list of the results. The machines can take the critic’s job as well, as I have previously argued.

What pieces like The Fear Of Missing Out and Machine Imagined Artworks make visible is an aspect of How Things Are Done Now and how this affects everyone, regardless of the nature of their work. This is “big data being used to guide the organization”. To regard such projects simply as parody or as play acting is to take a literary approach to art. But art doesn’t need to resolve such ontological questions in order to function, and may provide stronger affordances to thought if it doesn’t. What’s interesting is both how much such an approach misses and how much it does capture. As ever, art both reveals and symbolically resolves the aporia of (in this case the Californian) ideology.

The text of this review is licenced under the Creative Commons BY-SA 3.0 Licence.